Elizabeth Han – Personal Blog

- Devika Pillai

- Feb 3, 2021


Hi, I’m Elizabeth 😊

I study Design at Carnegie Mellon, with minors in HCI and Intelligent Environments. I strive to be a designer who embeds meaningful interactions in all of our perceived “spaces.” In my projects, I try to achieve this at multiple scales, connecting people to their loved ones, their environments, and ultimately greater purposes.

Project 1

Reflection on “A Survey of Presence and Related Concepts”

After reading the paper “A Survey of Presence and Related Concepts,” I was intrigued by its dense and methodical approach to evaluating the quality of a virtual environment. My design process is guided by user research, but I had never thought to apply such a vast and debated range of concepts, such as place illusion, plausibility illusion, coherence, immersion… and many more. In the context of our project, I wonder how much co-presence or social presence the experience can create, and which media can achieve it. From a cognitive standpoint, the paper states that even a phone call can provide ample feelings of social presence despite being a simple form of communication. This makes me think that social connections don’t necessarily require the “immersion” we imagine when we think of virtual reality spaces. Instead, they may need a simple but effective method of connection, such as the heartbeat band we saw on the first day of class. I also became interested in the idea of transportation as a way to create an advanced feeling of embodiment. For example, is it possible to design an experience that brings back feelings of bodily agency to patients who suffer from paralysis? Furthermore, how does the feeling of “being there” differ across accessibility levels? Lastly, I wonder how and why the current “virtual reality experience” through fully immersive headsets became predominant. Visual stimuli seem to be only one of many parts of an immersive experience. Yet designs in the consumer market seem to be dominated by visual fidelity rather than by the more integral parts of an experience that this paper dissects.

Reflection on “Social immersive media: pursuing best practices for multi-user interactive camera/projector exhibits”

The paper “Social immersive media: pursuing best practices for multi-user interactive camera/projector exhibits” shares various design strategies for optimizing socially immersive media: “an interaction in a shared social space using a person’s entire body as ‘input device’ unencumbered by electronics or props.” E studio projects focus a lot on these full-body interactions, yet I have found very few tips and tricks online while working on projects. As such, I was pleasantly surprised to see a number of helpful guidelines for creating a seamless interactive experience. In the section on narrative models, it was interesting how the level of abstraction decreased across the four examples: experiential, performance, episodic, and game. I’m personally a fan of conceptual experiences like Boundary Functions, the experiential narrative example, as it employs very simple graphics and relies heavily on participatory conditions. (The caveat, though, is that maybe I’m just one of the adult audience members who try to intellectualize the experience rather than accept it viscerally.) I wonder to what extent abstraction will play a role in our projects moving forward: how much freedom can we give users to maximize their presence? From a technical standpoint, it was useful to learn that even the slightest breaks in the illusion (such as the projection abruptly changing from one episode to another) can ruin the fluidity of the experience. It makes me think that I should test my interactions ahead of time for the project, rather than solidify them at the last minute, to avoid unnecessary clunkiness…

Project 2

3/29/21

Reflection on “AI Impacts Design” by Molly Wright Steenson

Molly’s talk illustrates how architects throughout history have influenced the design of artificial intelligence as we know it today. The talk revealed to me that the core of AI is not novel at all; what makes it novel is its sophisticated execution. The ideas of patterns, cybernetics, and even augmented reality were established through works that long preceded AI. These works led to the same questions that still linger in researchers’ heads today: “Technology is the answer, but what was the question?” AI seems to have evolved very quickly to tackle many problems, yet ethical regulation has yet to catch up. Nicholas Negroponte noted in the late 1980s that AI is “between oppressive and exciting,” yet we still struggle to prevent AI from being used oppressively against the human condition. I wonder how work in the humanities on AI ethics and the technical work that develops AI can be woven together more effectively to make AI a force for good.

If the system that builds an AI is so complex, hidden, and exploitative… what will motivate consumers to counter-police the system? Materializing the hidden labor? Demonstrating how the system uses your speech as training data? Where do we draw the line between helpful technology and technology that infringes on our ethics?

It’s interesting that the early development of AI was driven by the military, government, and geopolitics… rather than by a commercial boom like the one we see today. I wonder if the evolution of AI will be met with just as many disappointments in Boom 3. The battle now, though, seems to be about curbing its growth where necessary to prevent misuse.

I think this framework is helpful for building Project 2, as the six categories seem to define what an intelligent environment should be capable of. I’m particularly interested in ‘objects with digital shadows’ and ‘objects that subvert.’ A good design should be able to do both in order to make our lives easier… without being invasive. A design that offers a way in, as well as a clear way out, seems to be the most ethical approach to intelligence.

Initial Ideas

Research question:

How might we make intelligent systems more transparent? (What does it know about us? What can it do about our lives? How can we hide from its omnipresence? What can we control? Who is contributing to the labor? How is the data collected? How is the data interpreted?)

Augmenting our environments with our data?

Ideas

physicalizing/augmenting data collection process

the more you walk, the more you calibrate data about your environment

labor and ai

making labor more transparent

ex) connecting you to Mechanical Turk workers when you talk with an intelligent agent

representation: how can missing datasets be filled in?

who are you in the overall dataset?

ex) asian american, female, pittsburgh…

how are you being ~oppressed~?

ai shows the decision it makes, based on what it knows about you.

because you don’t fit in with the majority, your decision is being dictated by something else…

intelligence embedded interaction: using our phones as controllers of the world; point to things and get data/information (this is a tree, this is a mountain)

role of ai in our social relationships (extension from project 1)…

calibrating the agent based on how you want it to react, with various personalities

Conversnitch

2013–2014, with Brian House. An eavesdropping lamp/lightbulb that live-tweets conversations. A small microphone and a Raspberry Pi record audio snippets and upload them to Mechanical Turk, where human workers transcribe them.

WorldGaze

ListenLearner

HYPER-REALITY

3/31/21

Reflection on ‘Designing the Behavior of Interactive Objects’

This reading introduced a framework that uses personalities to dictate a design. I think this is quite forward-thinking, as more and more AI-driven services will become anthropomorphized to reflect the human-like actions they can take. I think the “aesthetic” approach, as the author describes it, will eventually be considered a functional one. Thinking through the personality is crucial in many settings, such as healthcare. I wonder what types of attributes can be manipulated when the final product is not robotic. Speech? Animation? Something else?

Reflection on ‘IoT Data in the Home: Observing Entanglements and Drawing New Encounters’

This was an interesting insight into how people want their IoT data to be experienced in the home. It reminded me of my sophomore-year project, where I wanted to delve into visualizing energy use to promote sustainable behaviors… yet it was really hard to make data feel approachable in human terms. Based on the paper, there seemed to be an overwhelming consensus among participants that they don’t trust their IoT devices. At the same time, looking at hard data feels inhumane and unhomely. Even data representations need to be woven wisely into a person’s mental model and day so that they can be easily digested. The tarot card and epic strategies were particularly interesting, as they aim to provide imaginative narratives through data rather than an endless list of graphs (which nobody wants). It revealed to me how impactful storytelling is when dealing with dry data…

It also raised the question: who controls the data? One participant noted that being able to manipulate their data made them feel too powerful, as if they could change the course of history… How should designers balance user autonomy, integrity, and privacy? In what ways can a flexible system be prone to misuse?

Case Study: ‘Better Human’ by Yedan Qian

“Better Human is a speculative design imagining a world where native technologies are being widely used to manipulate people’s sensation and mind for wellbeing purpose. It raises the question of whether human should rely on technology to gain self awareness and self control. Should we give up control and accept human-augmentation for good reasons?”

The system works in three parts: reflector, troublemaker, and satisfier. (Since it functions as a design fiction, it gives a picture of how the system would work rather than actually explaining the mechanics behind it.) The user engages with the design through physical objects/representations, which, again, serve more as design metaphors than actual designs.

Overall, the project serves as a provocation of how much control we want our devices to have over us. I’m inspired by its provocative element, use of narrative, and physical prototyping to explain the idea behind it.

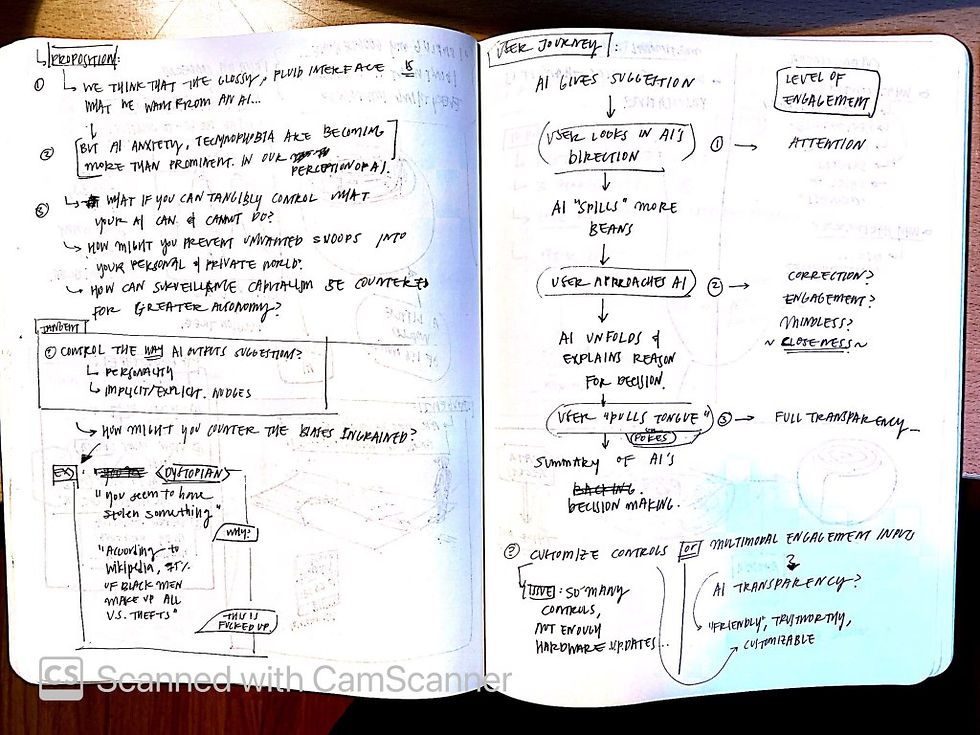

Final Research Question

How do humans gain control in a world dominated by AI?

When thinking of AI’s integration into our society, we imagine a world where decisions are dictated by machines, filled with loopholes and inhumane decisions. Such technology has already seeped into our lives in the present day: facial recognition promotes racial profiling, and machine learning algorithms are biased against people of color. This speculative project explores how humans can gain control in a world where AI dominates decisions in our everyday lives. (Key questions: transparency in decision making, a filtered understanding of the world, and how people will choose to react.)

1. Choosing your options

2. Choosing your transparency

3. Understanding your data (?)

4/4/21

Reflection on ‘Re-examining Whether, Why, and How Human-AI Interaction Is Uniquely Difficult to Design’

This paper articulated the difficulty of designing with AI. I could fully relate to the challenge of applying established UX design strategies to an AI product. It is often unclear what the system is capable of (capability uncertainty) and what types of outputs it can actually produce (output complexity). In my first project, I created scenarios in which a conversational AI mediates two people’s conversations… yet it is still unclear to me what services the AI would be able to offer. It is also unclear how it would respond to the many false positives and false negatives that are inherent in an AI-driven system.

The framework below seems helpful for understanding, at a high level, the types of AI systems designers have to build for. The key seems to be that designers can narrow their problem space by defining the types of interactions that are “probable.” They can also define the way the system would evolve/adapt with the user over time.

(Sidenote: the paper points out that rule-based approaches are limited in capturing the essence of AI’s constantly evolving nature, but they are a quick and dirty way to prototype, especially for level 1/2 systems. From personal experience, I think designers suffer from a cognitive dissonance. They know that the AI should be capable of so many things, but they grasp at straws by solidifying the most basic interactions through a rule-based approach… simply because that is the most accessible way to design today. I wonder how a design tool could respond to the generative nature of AI, so that designers can better expand their imagination of AI systems.)

Applying the findings from the paper to my project… I think I’m interested in exploring tangible ways that an AI system can present its dynamic, constantly evolving state. Software is quick to adapt to new changes, but can hardware be designed so that it is also generative? Can hardware be AI hardware without needing to be replaced constantly over time? Can it avoid being so reductionist that it is simply a cylinder (e.g., the Amazon Echo)?

(^That is only one of many goals for the project, though… I’ve recently had a lot of stray thoughts about what a generative, kinetic sculpture could look like.)

Sketches, Thoughts, Research

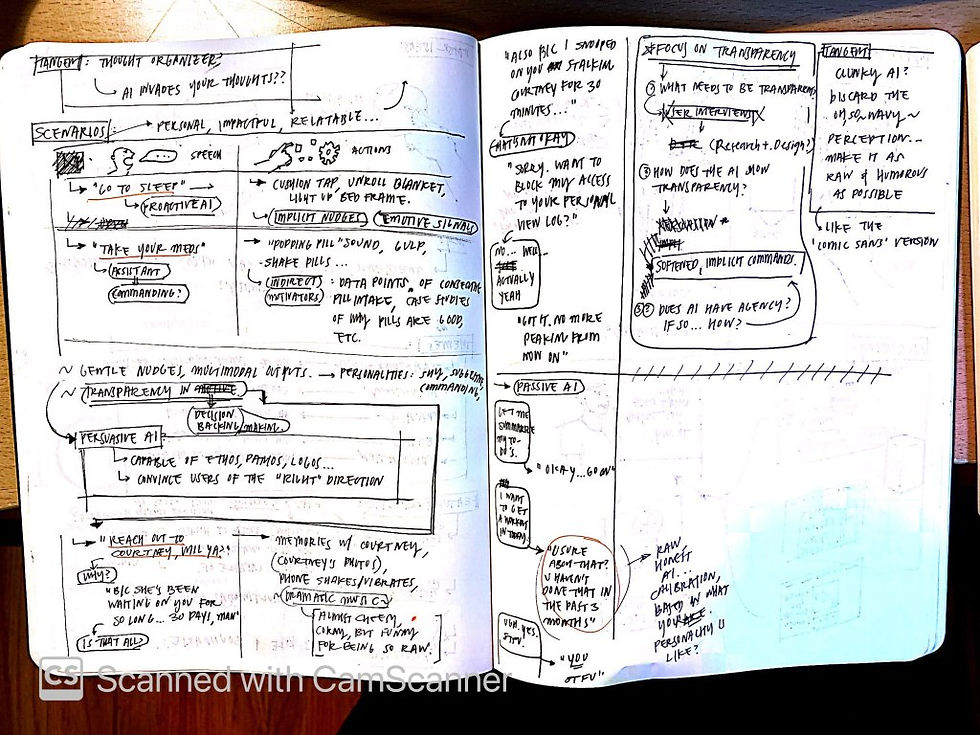

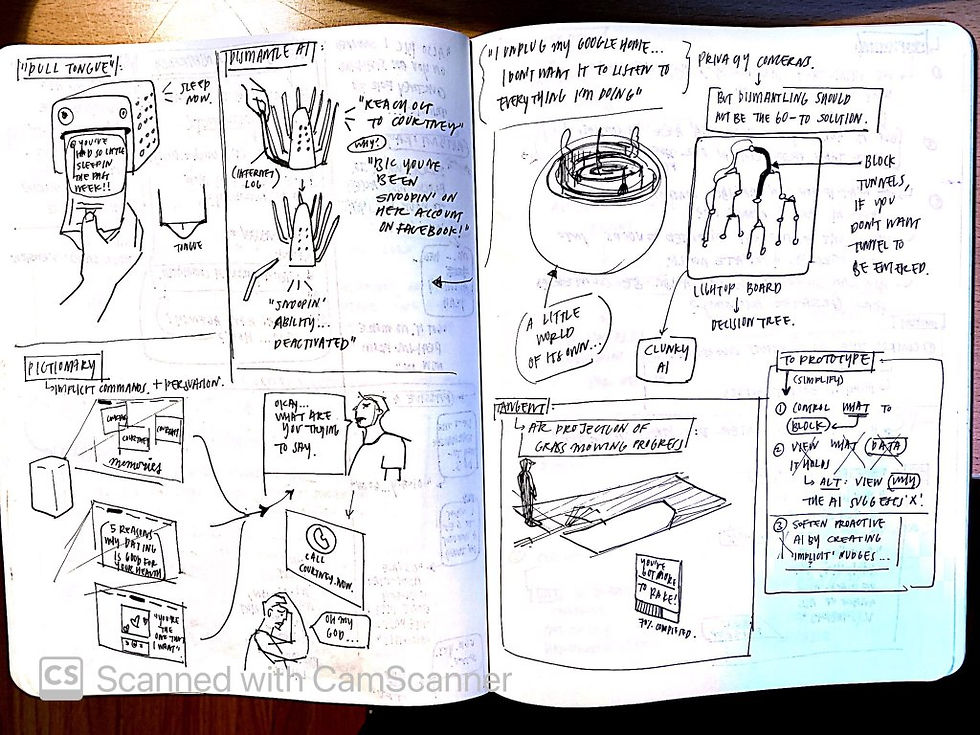

Sketches on intended qualities, conversations with a persuasive AI, user journey, and potential physical prototypes

Note: Pictionary is interesting to me… maybe more viable for another project though

Findings: Implicit/explicit nudges, autonomy over controls, transparency are all needed…

Trying to focus on customizable controls on an AI so that the creepy, surveillance capitalism-ness of current AI can be removed.

Can AI be under human control? Can you blind the AI? Can you learn more about what it’s thinking?

Note: I am focusing on a more anthropomorphic representation of AI, rather than AI as an embedded technology in designs. As a result, a lot of scenarios I have derived lean more towards the ~assistive AI~ that we have in the present day…

**Priority 1** is transparency and control. You choose whether your AI learns more or less about you.

**Priority 2** is fluidity of conversation. (Nod to cybernetics.) There are many ~human~ ways that an AI can persuade you or show its transparency. Emotions, gestures, and multimodal cues all play into the way it shares how much it knows about you. (Implicit nudges) (ex: “Ah man… fine. I’ll spit it out. I know that you’ve been looking at Emma’s profile picture for 45 minutes today! Want me to stop snooping?”)

User Journey Currently:

(Level of engagement)

Look at AI –> (level 1 engagement)

Approach AI –> (level 2 engagement)

Touch AI –> (level 3 engagement)

^Transparency increases throughout. Nod to Google Soli sensors
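The three levels above could be prototyped as a small state function. This is a minimal sketch, not a finished design. It assumes a hypothetical distance sensor that reports centimetres (or `None` when nobody is detected) and a hypothetical touch sensor. It approximates “look” as “someone is within sensing range.” The 150 cm and 60 cm thresholds are placeholders to tune by testing.

```python
from enum import IntEnum
from typing import Optional


class Engagement(IntEnum):
    """Engagement levels from the user journey; transparency rises with the level."""
    IDLE = 0      # nobody around: stay concealed
    LOOK = 1      # level 1: someone is within sensing range
    APPROACH = 2  # level 2: someone has come close
    TOUCH = 3     # level 3: someone is touching the object


# Hypothetical thresholds in centimetres; real values would come from user testing.
LOOK_RANGE_CM = 150.0
APPROACH_RANGE_CM = 60.0


def engagement_level(distance_cm: Optional[float], touched: bool) -> Engagement:
    """Map a (hypothetical) distance reading and touch state to an engagement level."""
    if touched:
        return Engagement.TOUCH
    if distance_cm is None or distance_cm > LOOK_RANGE_CM:
        return Engagement.IDLE
    if distance_cm > APPROACH_RANGE_CM:
        return Engagement.LOOK
    return Engagement.APPROACH


def transparency(level: Engagement) -> float:
    """Fraction of information revealed, rising linearly from 0.0 (idle) to 1.0 (touch)."""
    return level / Engagement.TOUCH
```

For example, `engagement_level(100, False)` gives `Engagement.LOOK`, and `transparency()` of that level is one third.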

Readings

ml models can be hard to understand… decisions are not clear (if made by a neural net)

training data bias, method of data selection, and bias in the algorithm itself are not made clear

no “bugs” from incorrect classification/regression – just bad data, or algorithm issues

path to transparent ai, one solution: google model cards – https://modelcards.withgoogle.com/about

Counter: how ai transparency can be counterintuitive in behavior..

https://arxiv.org/abs/1802.07810 – when ai decisions are made transparent, people were more likely to miss the errors due to information overload.

automation bias – people trust the ai’s decision, because it isn’t explained.

one way to resolve this conflict between transparency and usability is to put explanations in human language rather than complicated machine language (a.k.a. design for the explanation behind a machine decision, rather than just the decision alone) (note: this could even be imagined with signals made through implicit nudges, like your bedframe flashing lights to encourage you to get some sleep)

in conclusion: ai transparency needs to be better designed to balance ethics and usability altogether
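Designing the explanation, rather than just the decision, could start as simply as a template. This is a minimal sketch. It assumes the system can report how much each factor pushed toward a decision. The factor names and weights in the example are made up. The template turns the strongest supporting factors into a sentence instead of a raw score:

```python
def explain(decision: str, contributions: dict[str, float], top_k: int = 2) -> str:
    """Turn the factors that pushed hardest toward a decision into a plain-language sentence."""
    # Keep only factors that pushed toward the decision, strongest first.
    supporting = sorted(
        (item for item in contributions.items() if item[1] > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    reasons = [name for name, _ in supporting[:top_k]]
    if not reasons:
        return f"I suggested {decision}, but I can't point to a clear reason."
    return f"I suggested {decision} mostly because of {' and '.join(reasons)}."
```

For example, `explain("winding down for bed", {"how late it is": 0.6, "your screen time today": 0.3, "your calendar": -0.05})` returns "I suggested winding down for bed mostly because of how late it is and your screen time today."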

the transparency paradox: current efforts toward ai transparency

transparency paradox – algorithms are more at risk of malicious attacks when ai becomes more transparent…

LIME (Local Interpretable Model-agnostic Explanations) – explaining the predictions of any classifier
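The core idea behind LIME can be sketched in plain Python:

1. Switch an input’s features on and off.
2. Ask the black-box model for a score each time.
3. Weight each variation by how close it is to the original input.
4. Fit a weighted linear model whose coefficients act as the explanation.

This is a simplified version with its own assumptions. It enumerates every on/off mask instead of sampling, and it uses an unregularized least-squares fit instead of LIME’s sparse one. The kernel width is an arbitrary choice, and `black_box` stands in for any model.

```python
import itertools
import math
from typing import Callable, Sequence


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_weights(black_box: Callable[[Sequence[int]], float], n_features: int,
                 kernel_width: float = 0.75) -> list[float]:
    """LIME-style local explanation over binary 'feature present' masks.

    Every mask is enumerated (fine for a handful of features; LIME itself samples),
    weighted by closeness to the original input (all features present), and a
    weighted linear surrogate is fitted. Returns one weight per feature.
    """
    rows, ys, ws = [], [], []
    for mask in itertools.product([0, 1], repeat=n_features):
        # Distance = fraction of features removed; the kernel favours nearby masks.
        d = 1 - sum(mask) / n_features
        rows.append([1.0, *map(float, mask)])
        ys.append(black_box(mask))
        ws.append(math.exp(-d * d / kernel_width ** 2))
    # Normal equations of weighted least squares: (X^T W X) beta = X^T W y.
    k = n_features + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, ws)) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, ys, ws)) for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept
```

With a made-up additive score, `lambda m: 0.2 + 0.5 * m[0] - 0.3 * m[1]`, and `n_features=3`, the weights come out as roughly `[0.5, -0.3, 0.0]`. An additive model is fitted exactly. For real models, the interesting cases are the non-additive ones, where the surrogate is only a local approximation.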

Outlining/defining ‘transparency’

helpful guidelines… transparency not only to the user, but also to society at large (ex: transparency in employment changes due to ai)

Overall question then becomes: When is AI transparency necessary, and how can it be humanly explained?

When AI transparency is needed: Storyboards

Case Study: Surface Matters

This project is interesting for its unique use of materials to evoke a new experience. I want to delve into a novel form of tangible interaction in a similar way, where the interaction feels more human and welcoming.

methods of prototyping

wizard of oz- how does the ai feel, tangible interactions

prototyping with ai classifiers – lobe.ai, teachable machine

DtR – different behaviors/physical forms. which ai feels most trustworthy? when do we cross the threshold of creepy vs. helpful?

what is the best way to test the potential of an ai service…?

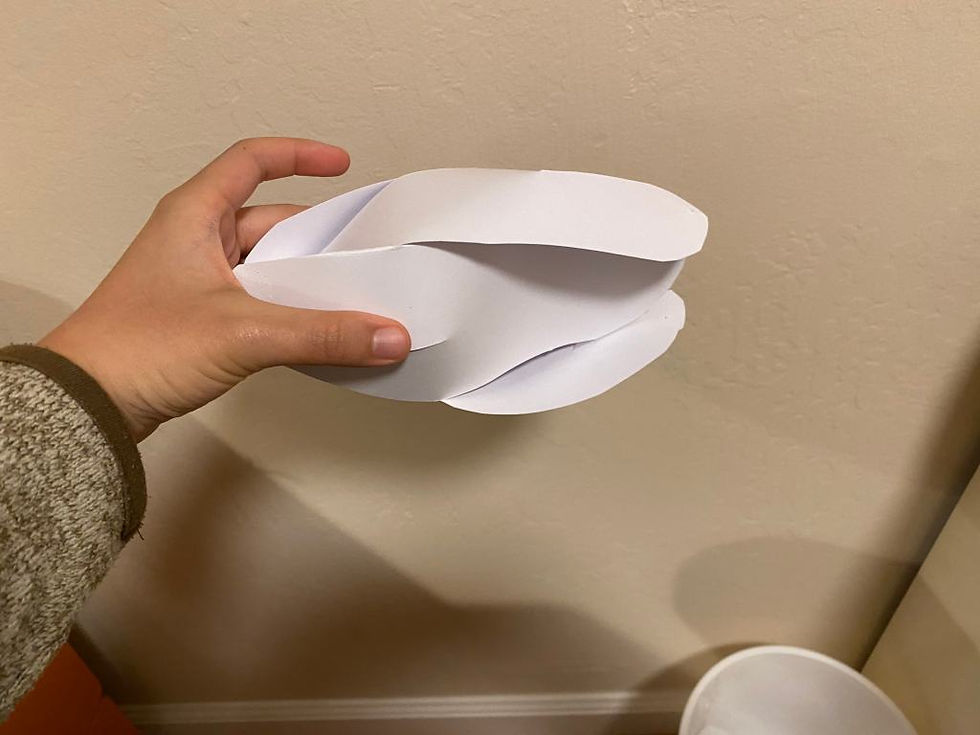

4/5: Physical Prototype 1

I made paper prototypes to illustrate the idea of transparency.

Initial iterations

State 0: Concealed

State 1: Present/Alive/Nudge to ‘openness’ and ‘transparency’???

Some version of linear actuator would be inside to push the scales outwards.

I like how the scales make the ‘device’ feel more alive. It’s almost like a living creature, expanding its ‘furs’ to speak with the user. The prototype inspires more use cases to consider. What does this novel interface allow?

State 2: Most Transparent?

State 3: “Looking around”

Inspirations for form

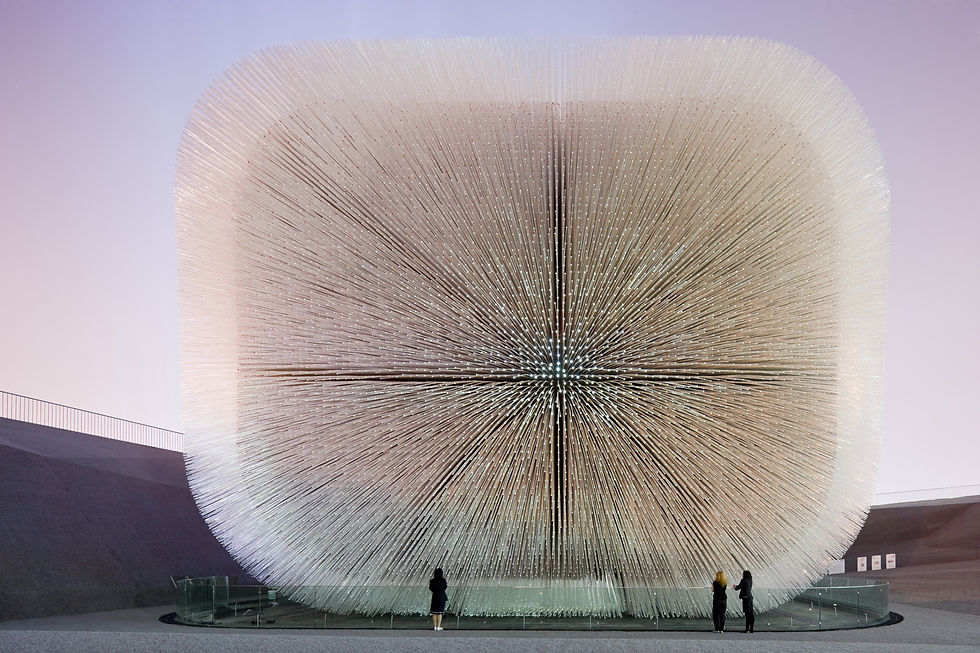

UK pavilion – Heatherwick

Julie and Julia

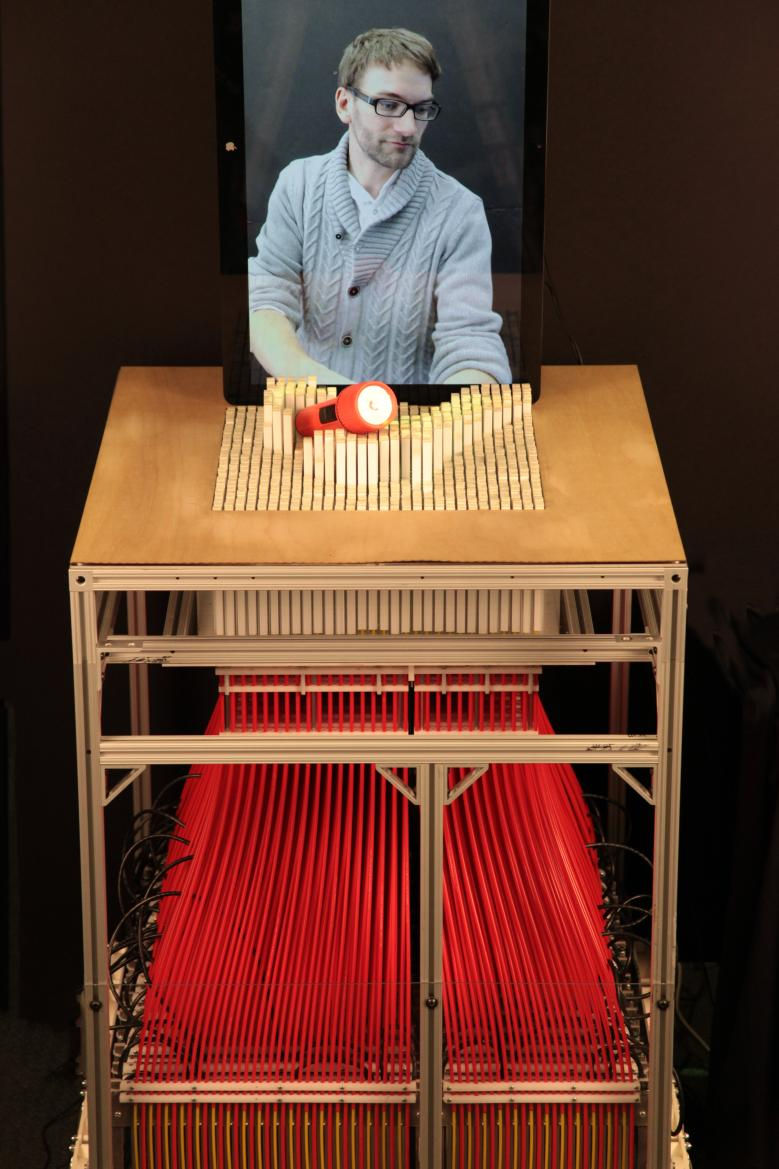

inFORM – Tangible Media Group

pinecone! (bio)

Printed paper actuator – Morphing Matters Lab

Potential inner workings

Feedback from Dina:

Try string mechanism, rather than linear servo

Find new case study that is more similar to my project (using kinetic movement?)

Reasoning for current form? Needs justification

The change in form can be layered — transparency of material, changing according to sunlight, etc.

4/7/21

Case Study: Water-reacting architectural surface

The project below is more similar to my project in its execution. Mine is based more on kinetic movement than on a novel material, but I am inspired by their use of geometric patterns to further emphasize the notion of a “reveal.”

Other notable projects for servo mechanism (thanks to Dina):

I wasn’t able to find much time to work on the prototype between Tuesday and Thursday, but I was able to implement the small change below.

The leaves can now open up when the string is pulled down. Time to try this out with a servo.

thought: maybe something appears underneath the petal when opened up? haha
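For the string-pull mechanism, the amount of string pulled is the arc length, s = r·θ (θ in radians), if the string wraps around a round pulley on the servo. This is a minimal sketch that assumes such a wound pulley. A string tied to the tip of a lever-style horn travels less than the arc. The radius and the 180° range are placeholder values.

```python
import math


def string_travel_mm(pulley_radius_mm: float, angle_deg: float) -> float:
    """String wound onto a round pulley when it turns by angle_deg: arc length s = r * theta."""
    return pulley_radius_mm * math.radians(angle_deg)


def servo_angle_for_pull(pulley_radius_mm: float, pull_mm: float,
                         max_angle_deg: float = 180.0) -> float:
    """Servo rotation (degrees) needed to pull the string by pull_mm."""
    angle = math.degrees(pull_mm / pulley_radius_mm)
    if angle > max_angle_deg:
        # Hobby servos typically turn about 180 degrees (placeholder default).
        raise ValueError(f"{pull_mm} mm needs {angle:.0f} degrees; use a larger pulley")
    return angle
```

For example, a 10 mm radius pulley turning 90° pulls about 15.7 mm of string.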

4/8/21

Feedback from Dina:

What is the interaction? (ex: ask to play music from spotify, ai shows that spotify already retrieved your personal data, when i’m having a conversation with someone else, ai reveals that it’s recording) — speculative appliance

what triggers the device to be transparent?

String pulling on paper has issues of getting stuck –> dina: previous project used straws/3d model to smooth and create a channel for the moving string

portions of the ai opening is cool — two ways of execution: 1. multiple servos. 2. paper actuators

potential materials for paper actuator

example projects:

Excerpt from Active Matter by Skylar Tibbits

4/13 Reflection on Midpoint Presentation Feedback (Daragh and Brett)

Notes

Brett:

coldplay example – i wish it wasn’t so obvious

transparency use case – i wish was deeper or darker (needs to be more interesting)

i love the interaction of breathing and opening up

how much it breathes and opens up correlates to what the content is going to be

correlate to different scenarios

Daragh:

like it, plumage,

needs more richness on the information that’s being revealed

the object itself – what do you see underneath, what’s being invited in the view of what’s under the hood?

revealing the electronics?

makes me think, interaction that you can capitalize on

old work in public displays – interactive tvs – looks at different states of activation. far away: ambient. as you move through, there are four stages of engagement

opening thing up, and inviting someone to attend to it, engage with it.

move closer and invite to

progressive disclosure – glance, move in more and more

nice interactions around proximity, engagement, evolving the scenarios

Brett:

the paper petals – you could talk to mark to make it fit in a better way

inner skin that’s light or sound

as you got closer to it, maybe it tries not to share as much

Daragh:

electrochromic glass?

electric glass could be interesting aesthetic

if individual petal can’t be actuated

Reflection

Based on the midpoint presentation feedback, I want to focus on the following things:

Prototype layers of interaction (distance correlates to openness, light emitting from within)

Develop better scenarios related to AI transparency (“deep and dark,” rich information)

Consider what will be shown underneath –> research different mechanisms

Finalize prototype on 3d modeling software
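For the first item, one way to make distance correlate with openness is a clamped linear map from viewer distance to petal angle. This is a minimal sketch. The near/far distances and the 0–70° range are placeholders, and the distance reading is assumed to come from some proximity sensor. The `invert` flag covers Brett’s idea that the object might share less as someone gets closer.

```python
def petal_angle(distance_cm: float, near_cm: float = 30.0, far_cm: float = 200.0,
                closed_deg: float = 0.0, open_deg: float = 70.0,
                invert: bool = False) -> float:
    """Map viewer distance to a petal opening angle.

    By default the petals open more as someone gets closer (progressive disclosure);
    invert=True makes the object close up as someone approaches instead.
    """
    # Normalise: 1.0 at (or inside) near_cm, 0.0 at (or beyond) far_cm.
    t = (far_cm - distance_cm) / (far_cm - near_cm)
    t = min(1.0, max(0.0, t))
    if invert:
        t = 1.0 - t
    return closed_deg + t * (open_deg - closed_deg)
```

With the defaults, someone at 115 cm sees the petals half open (35°).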

4/16 Feedback from Shannon (Products POV)

I reached out to my friend Shannon in the P track to get some feedback on the form, as well as ask for advice for fabrication. Here’s what she said below:

petals are not subtle, personally feels a bit creepy

solid casing that encases the whole thing…

smaller flaps that attach to the solid casing…

so that flaps aren’t overlapping each other…

reminds me a bit of an armadillo

there’s no prescribed shape to it… looks like an egg

you can reference alexa

petals are too big, takes away from the form

revealing more of the form and minimizing the petals would be better.

the movements would also be less obvious

if it’s stationary, any movement will be obvious

petals should be minimized, visually less in your face

Suggest: model various shapes of forms, through foam modeling

model different sizes/blocks of shapes…

ask for people’s opinions on what feels natural to them…

more sketching of form…

in consideration of the form, you have to consider how it will be made…

wooden frame…

Nitinol/Flexinol Wire Research

Tackling goal 3:

The current mechanism with the servo is a bit tacky, especially if I want to show what’s underneath the petals. I went back to looking at muscle wire (Nitinol/Flexinol wire) to see if it would be implementable with paper. It turned out that there were a lot of projects to learn/gain inspiration from.

How To: https://makezine.com/2012/01/31/skill-builder-working-with-shape-memory-alloy/

Origami instructions: http://highlowtech.org/?p=1448

I hesitated for a while about whether to buy the wire… I didn’t want to completely change the course of my project at this point in the process. Ultimately, I ordered some on Amazon so that I could at least try out the mechanism to see if it would fit.

Detailed next steps

STRUCTURE MATERIAL

makerspace (MAKER NEXUS)- $150 for a month

currently paying $220 for technology…

if not, i’ll just cut out the parts myself on the thick craft paper i currently have

TO FABRICATE

laser cut structure

3d print servo holder?

cut petals

circuit for controlling wires (how?)

mapping 360 sensor to petals?
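For “mapping 360 sensor to petals,” one possible approach rests on two assumptions: the petals are evenly spaced around the vertical axis, and the sensor reports the viewer’s bearing in degrees. Under those assumptions, the object can pick the petal whose centre faces that bearing, plus optional neighbours on each side:

```python
def facing_petal(bearing_deg: float, n_petals: int, offset_deg: float = 0.0) -> int:
    """Index of the petal whose centre is closest to a viewer at bearing_deg.

    Assumes n_petals evenly spaced around the vertical axis, with petal 0 centred
    at offset_deg and indices increasing in the same direction as the bearing.
    """
    sector = 360.0 / n_petals
    # Shift by half a sector so each petal owns the +/- half-sector around its centre.
    return int(((bearing_deg - offset_deg + sector / 2) % 360.0) // sector)


def petals_toward(bearing_deg: float, n_petals: int, spread: int = 1,
                  offset_deg: float = 0.0) -> list[int]:
    """The facing petal plus `spread` neighbours on each side, wrapping around."""
    centre = facing_petal(bearing_deg, n_petals, offset_deg)
    return [(centre + k) % n_petals for k in range(-spread, spread + 1)]
```

With 8 petals, a viewer at 180° faces petal 4, and `petals_toward(0, 8)` returns `[7, 0, 1]`.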

PETALS MATERIAL

settled on using paper — i want it to be somewhat malleable

sound of paper brushing up against each other is more pleasing

LIGHT

light inside (smart lamp + speaker?)

revolves depending on where you are

WHAT’S INSIDE?

soft light (speaker/fabric material)

ADDED LAYER OF INTERACTION

swipe to clean

swipe to hear more options (multiple options —> embedded in the interaction)

4/20

Dina Feedback

Test out one petal for nitinol

3d print base, edge of petal

scale – smaller scale? what’s the interaction? what’s underneath?

what’s inside? (ex: open up when having convo with boyfriend –> shows that ai is listening. option to close for important convo)

define transparency, type of data

doesn’t have to fix everything, just one specific problem

saving convo and sending to cloud is… not a conspiracy theory

Look to this article: https://www.wired.com/insights/2015/03/internet-things-data-go/

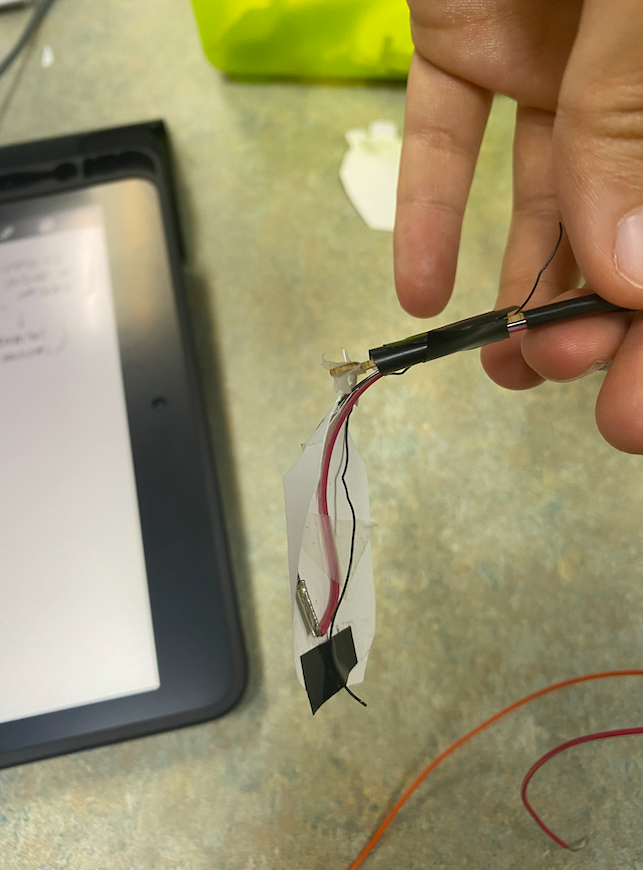

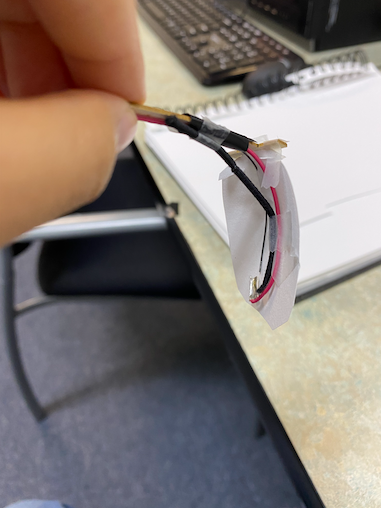

Nitinol Experiments

This was the most successful attempt. I needed to adjust two things. First, the way the nitinol wire is heated to set its shape: a torch is most effective, with the wire coiled on a steel rod. Second, the voltage used to actuate it: 4.5V was most effective for the ~5 inch wire I cut, 3V was too slow, and 12V seemed to be too much.

There is a way to calculate how much current should go through the wire to actuate it, but I haven’t used it yet. It’s available on the manufacturer’s website: https://www.kelloggsresearchlabs.com/nitinol-faq/ (question 1.6)
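I haven’t checked the manufacturer’s exact method, but the first-order version is Ohm’s law. The wire’s resistance is its resistance per unit length times its length, I = V / R, and P = V·I. This minimal sketch assumes you look up ohms per inch for your wire diameter in the supplier’s table. The 1.0 Ω/inch in the example is a made-up placeholder, not a real spec. The sketch also ignores the supply’s own limits and the fact that the wire’s resistance shifts as it heats and contracts.

```python
def wire_current(voltage_v: float, ohms_per_inch: float, length_in: float) -> tuple[float, float]:
    """Estimated current (A) and power (W) for a straight nitinol wire at a fixed voltage.

    Ohm's law: R = ohms_per_inch * length_in, I = V / R, P = V * I.
    ohms_per_inch must come from the supplier's table for your wire diameter.
    """
    resistance = ohms_per_inch * length_in
    current = voltage_v / resistance
    return current, voltage_v * current


def voltage_for_current(target_a: float, ohms_per_inch: float, length_in: float) -> float:
    """Voltage that would drive target_a through the same wire (V = I * R)."""
    return target_a * ohms_per_inch * length_in
```

With the placeholder 1.0 Ω/inch, a 5 inch wire at 4.5 V would draw about 0.9 A (about 4 W). The supplier’s recommended current for the wire diameter is the number to compare against.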

Actuation sped up two times

The main problem with the above experiment was that the wire fails to bounce back successfully once the current is no longer flowing. Watching the following video made me realize that an opposing force needs to be applied so that the wire can straighten itself out more quickly:

Another issue was the weight of the alligator clips, which seemed to limit how much the wire bends. As such, I decided to use aluminum tape I (fortunately) had around at home. (No copper tape was available, but it does the job well.)

Since multiple petals need to be actuated, I looked into how I could control each output effectively on Arduino. A shift register like the one below seems to be a good way to get as many digital outputs as I need.
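A small simulation shows how a shift register turns one data pin into many outputs. It assumes an 8-bit serial-in/parallel-out part such as the 74HC595, with one petal per output. The bits are clocked in most-significant bit first, as Arduino’s `shiftOut(..., MSBFIRST, ...)` does, so after eight pulses bit i of the byte sits on output Qi. Each output would still need to switch a transistor, since a shift register pin can’t supply the current the wire needs.

```python
def petals_to_byte(open_petals: set[int]) -> int:
    """Pack the petals that should be open (indices 0-7) into one byte: bit i = petal i."""
    value = 0
    for i in open_petals:
        if not 0 <= i < 8:
            raise ValueError(f"petal index {i} does not fit in one 8-bit register")
        value |= 1 << i
    return value


def shift_out_msb_first(value: int) -> list[int]:
    """Bits placed on the data pin, one per clock pulse, most significant bit first."""
    return [(value >> bit) & 1 for bit in range(7, -1, -1)]


def clock_in(bits: list[int]) -> list[int]:
    """Simulate an 8-bit serial-in/parallel-out register; returns outputs Q0..Q7 after latching."""
    stages = [0] * 8
    for bit in bits:
        # Each clock pulse moves every stored bit one stage along; the new bit enters Q0.
        stages = [bit] + stages[:-1]
    return stages
```

For example, opening petals 0 and 3 gives the byte 9 (0b00001001). After shifting, outputs Q0 and Q3 are high.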

Shaping the wire. The shape doesn’t quite stay in place, even if secured with strong tape

To execute the “opening up petals,” it took many tries to get the right movement… I realized through the process that aluminum tape failed to conduct any electricity.

Successful attempts:

Note: At their 4/20 event, Apple announced an update that protects your privacy when interacting with apps… I wonder how they landed on the design decision to show the different types of data being tracked. How do people decide what is too much intrusion and what is not?

Makerspace visit – got lasercut training for 2 hours

4/22 – 4/26: Snapshots of progress

Final petal opening test on old model. Stabilized by holding down wire with tape on structure

better movement.

Lasercutting diagrams

Head-wrecking process… in Illustrator

Laser cutting on a 45W Helix. Used a pulp board I had at home.

First draft. Weak joints, unstable petal holder. Needs refinement.

4.5w burnt the wire.

Soldering

Petal open

Nice open. Would be better if opens more.

Counter-force to pull petal back down???

Magnet to snap the petal back in place?

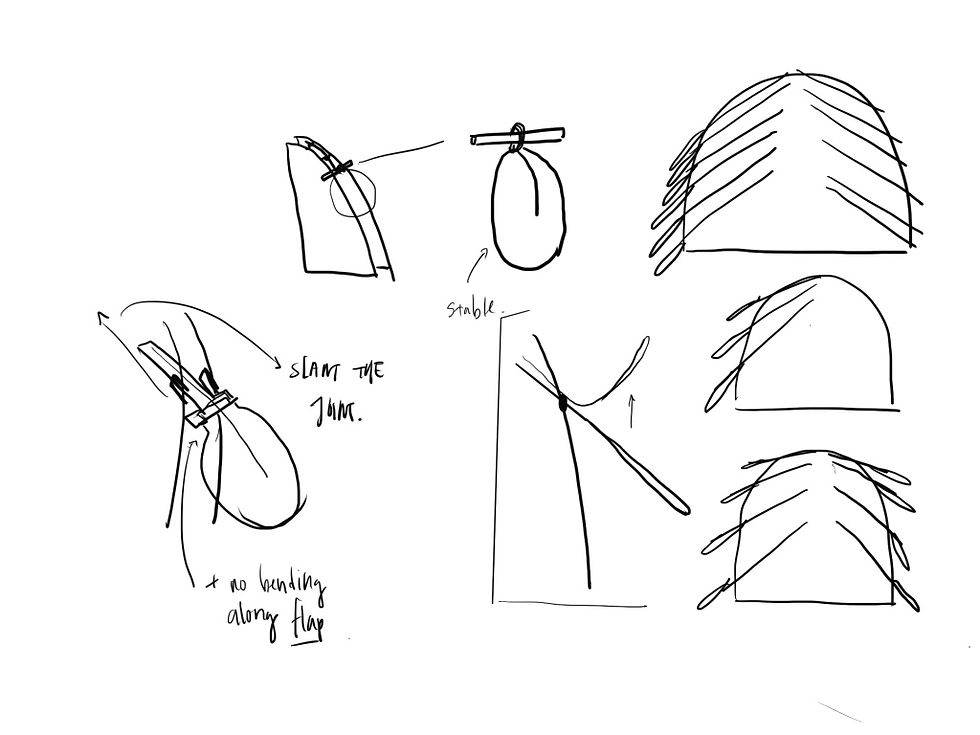

Thought of slanting the joints, but abandoned

Tried spring wire, based on inchworm video. Did not work

Hair tie (rubber band) attempt. Also did not work

What to do, what to do…

4/27 Feedback from Daniel + Dina

Daniel:

types of data could correlate to certain colors

the object could be beautiful as a tangible object

petals could be made of fabric?

mylar paper…

fabric: below,

leds?

pet – opens, and below are different colors with fabric, a whole map of different data types…

when skin opens, reveals the colors

combination of different data types

not a piece of technology

a plushy toy…

wire – need to use a transistor…

arduino

the voltage supply is providing a lot of current

fabric: if painted with chromatic paint

heat could change the color…

arduino can only provide 130 milliamps

transistor will work as a switch for the current

current will come from the power supply rather than from the arduino

one or two petals prototyped is good, sketches are beautiful

3d model the petals

i wonder how are you thinking about tapping into the data?

may need to do more research, show a diagram… how will the whole system work?

facebook: ads, yesterday you were looking at this

explore what will be the way it integrates with social media

data categories – make them up by yourself, according to your experience

white or black skin,

below the skin, all the colors pop up inside

the color = data type

Dina:

one part could be moveable, but attach the petals for everything…

show human figures in systems diagram

fabric or mylar

thermochromic pigment

check felt project from before: http://www.feleciadavistudio.com/

Reflection on feedback

Will try to categorize by personal data on each leaf node… Need to decide how broad each category is, as well as how to show/map it. (Maybe an accompanying screen to detail the findings? like a 3d rendering of a human body with explanations attached?)

Want to try the thermochromic pigment, but rather than just showing differentiation through color, I might try creating different “states” through different petal movements

Transistor suggestion is helpful – will try out with arduino

Tapping into the data on the backend? API? Might check it out. Seems like a detailed design step…

Prototyping just some petal movement is good, but I need to fabricate all of them, as suggested by Dina

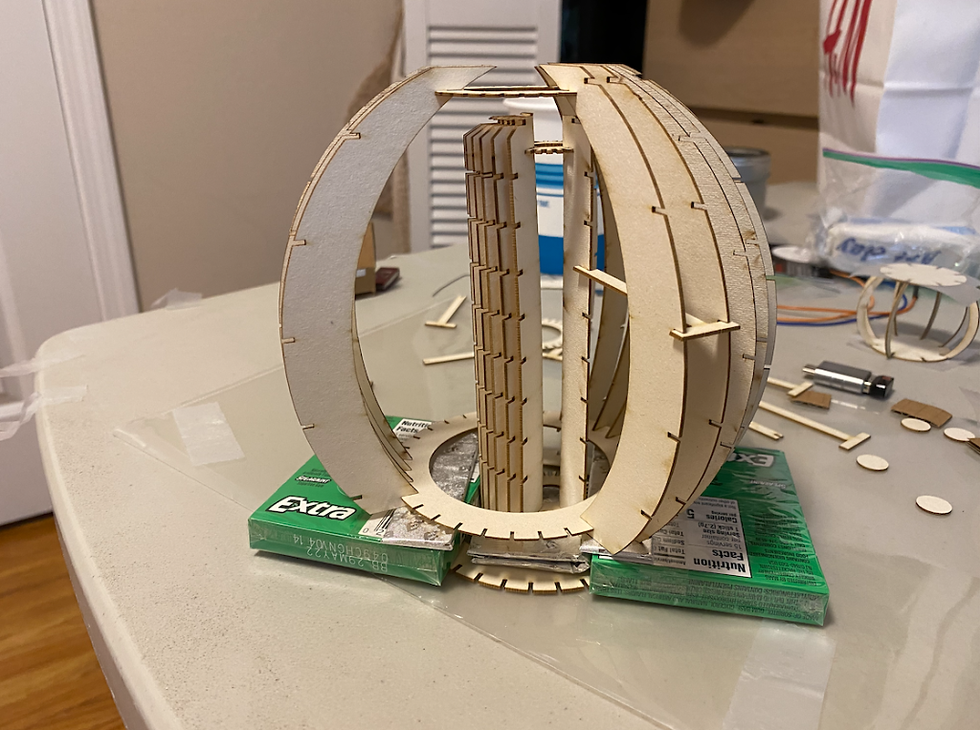

4/28 Better inner structure

Deeper joints for stable structure

Calculated the width and depth of the petal holders; they needed to be “sliced” depending on the diameter of the sphere. Manual work in Illustrator… but it ended up working out pretty great.

Ended up not needing the inner vertical poles to secure the petal holders. Interesting that the new, deep joints were able to secure the structure so well.
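The slicing follows from sphere geometry. A flat cut at distance h from the centre of a sphere with radius R is a circle of width 2√(R² − h²). This minimal sketch gets the holder widths under one assumption: the layers are evenly spaced horizontally. The layer count and units are up to the model, and the sketch covers only the width, not the joint depth.

```python
import math


def slice_width(radius: float, height: float) -> float:
    """Width of a sphere cut by a plane `height` away from its centre: 2 * sqrt(R^2 - h^2)."""
    if abs(height) > radius:
        raise ValueError("plane does not intersect the sphere")
    return 2.0 * math.sqrt(radius * radius - height * height)


def layer_widths(diameter: float, n_layers: int) -> list[float]:
    """Widths of n_layers evenly spaced horizontal slices between the poles (poles excluded)."""
    r = diameter / 2.0
    step = diameter / (n_layers + 1)
    return [slice_width(r, -r + step * (i + 1)) for i in range(n_layers)]
```

For a 10 cm sphere with 3 layers, the middle layer is 10 cm wide and the outer two are about 8.66 cm.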

4/29 Next steps

Fabrication next steps:

Old notes on transistors from physcomp…
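With Daniel’s transistor suggestion, the wire’s current comes from the external supply through a transistor. The Arduino pin only supplies the small base current. A common rule of thumb for an NPN transistor used as a switch is to give the base about a tenth of the load current so the transistor saturates. The base resistor is then R = (V_pin − V_BE) / I_B. This minimal sketch assumes a 5 V pin, V_BE ≈ 0.7 V, and a 20 mA pin budget. That budget is a commonly cited safe figure for an Uno pin, so check your board and transistor datasheets.

```python
def base_resistor_ohms(load_current_a: float, pin_voltage_v: float = 5.0,
                       v_be: float = 0.7, forced_beta: float = 10.0,
                       max_pin_current_a: float = 0.020) -> float:
    """Base resistor for an NPN transistor used as a low-side switch.

    Rule of thumb: drive the base with load_current / forced_beta (about 1/10 of the
    load) so the transistor saturates, then R_B = (V_pin - V_BE) / I_B.
    """
    base_current = load_current_a / forced_beta
    if base_current > max_pin_current_a:
        raise ValueError(
            f"needs {base_current * 1000:.0f} mA of base current; "
            "consider a logic-level MOSFET or a Darlington instead"
        )
    return (pin_voltage_v - v_be) / base_current
```

For a 150 mA load, this gives a base resistor of about 287 Ω. For loads near 1 A, the base current would exceed the pin budget, which is when a logic-level MOSFET or a Darlington is the usual alternative.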

Interaction next steps:

types of data to show through petals (color + movement)

types of inputs (gestures, speech)

storyboard
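A small lookup table is one way to keep the “types of data to show through petals (color + movement)” mapping easy to edit while prototyping. This is a minimal sketch. The categories, colours, movements, and angles are placeholders, in the spirit of making up data categories from personal experience.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PetalSignal:
    color: str          # colour revealed under the petal (e.g. fabric or a thermochromic patch)
    movement: str       # how the petal moves to announce this data type
    opening_deg: float  # how far the petal opens


# Hypothetical categories and values, to be replaced after user testing.
SIGNALS = {
    "location": PetalSignal("#2E86DE", "slow breathe", 30.0),
    "voice": PetalSignal("#E74C3C", "quick flutter", 45.0),
    "browsing": PetalSignal("#F1C40F", "ripple around", 60.0),
}


def signals_for(active_data_types: list[str]) -> list[PetalSignal]:
    """Petal signal for each data type currently being collected; unknown types are skipped."""
    return [SIGNALS[t] for t in active_data_types if t in SIGNALS]
```

Keeping the mapping as data rather than logic makes it easy to swap colours or movements after each round of feedback.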

Found a super helpful page: https://www.kobakant.at/DIY/?p=7981.

Nitinol wire use cases below:
