Audio-Based Interactions | Rachel Legg, Se A Kim, Michael Kim

- rlegg1

- Mar 31, 2022

Updated: May 10, 2022

Project: "multisensory interactions, visual and haptic inputs and outputs have been mainly used while spatial audio has been underutilized. How do we leverage spatial audio in augmenting our environments? How can we use sound as a main channel of our interactions with others, with objects and environments?"

Group: Rachel Legg, Se A Kim, Michael Kim

3/30/2022

Thoughts of readings:

"Towards a Framework for Designing Playful Gustosonic Experiences"

iScream and WeScream both create a more playful relationship with food. They use celebratory technology, rather than corrective technology, around the act of eating, which fosters a positive relationship with food. Eating behaviors themselves can be influenced by sound, as people want to experiment with eating faster or slower, licking more or less, or changing the amount of ice cream. What we hear while eating affects our enjoyment of food and shapes the overall experience! It is important and fun to see how the senses inform each other. WeScream also introduces a social dynamic where people can create flows of sounds together, which facilitates more playful face-to-face interaction.

For the reading on “Towards a Framework for Designing Playful Gustosonic Experiences”, I quite enjoyed reading about the “iScream” project and its familiar design. Because the system is designed around something that already comes naturally to most people, it is simple for anyone to pick up the project and understand what to do: just lick the ice cream. It’s playful in that licking the ice cream in different ways may result in different sounds or instruments playing. For our own project, I think we should keep this playful nature and let the user play around with different ways to interact with whatever we make.

"Toward Understanding Playful Beverage-based Gustosonic Experiences"

Sonic Straws also highlights the joy of multi-sensory experiences by focusing on drinking. Drinking happens every day, and this project again uses celebratory technology to celebrate an experience that happens so often. It is interesting to consider how sound can impact how we perceive flavor and texture. Incorporating playful, personalized sounds when drinking through a straw changes the perception of the activity and encourages people to engage all their senses. People like being able to set their own sounds, which makes the straws even more fun as they change from day to day with your beverage. This creates self-expression and allows an opportunity for relaxation or for shaping your own experience and state of mind. This project amplifies entertainment and joy and also allows people to create melodies together. The creators encourage designers to look at how experiences like this can be geared to personal taste and preferences, along with amplifying sound to improve these shared experiences. This area of connecting sound to action is an open-ended opportunity that can heighten an experience while engaging multiple senses!

I found the reading on Sonic Straws to be quite interesting. The researchers/designers figured out a way of incorporating a new sense into the action of drinking, something we have made commonplace by drinking water and other beverages many times throughout the day. I will be honest, however: it’s hard for me to see a practical use for this project, and the question “would people actually use this?” kept popping up in my head. It’s obvious that the group put a lot of thought and effort into it, even anticipating some play elements, but I’m curious to see whether our design projects will end up the same way, without a necessarily practical use, or whether we will make something that people can incorporate into their daily lives.

Initial Possible Directions

We met and brainstormed some directions for adding sound to experiences. These included uncovering the thoughts of plants, the relationship of sound to color, the relationship of sound to emotion, or maybe sound when you work out.

Individual Explorations of ideas, precedents & storyboarding:

Rachel was pretty interested in how color could connect to sound and how this could be personalized from person to person.

Some precedents for color and music:

“Hear the Rainbow” - sounds of colors through data and sensory perception!

----

“MetaSynth” - original sound to color synthesizer (sounds made of pixels from images)

“AudioPaint” - program that translates each pixel's position and color into sounds

this turns pictures into music!

-we often interact with color and sound simultaneously without realizing it

-what if we showed the connection within an environment?

-----

Siana Altiise has synesthesia leading to music having connection to colors and emotions

----

Sound Color Project

This explores the relationship between audio and visual spectrums.

------

“Colors of the Piano”

This allows you to see the change in color based on keys of piano (length of note, tone..)

Rachel's idea is there is a mirror that you can approach and it seems to measure you, before showing a color palette along the side panel that coincides with your outfit. This color palette would trigger the layering of sounds based on the colors. The person could click on a color to isolate the sound of that specific color or hear them all together. This could have a social element, too as you can expand the color palette with multiple people and have fun experimenting with sounds to color. This creates a fun, personalized experience where it can change day to day based on what you are wearing. Maybe you try to make harmonious music or lots of sound? Maybe this will change people's behavior to be more adventurous with the color they wear? Or make people think more about the connection between color and sound?

Michael was interested in a concept that could use the Spotify API and is built around recorded behaviors. For common activities like lifting, cooking, and taking a shower, sounds could be recorded to identify the action, the system could reinforce that identification through learning, and music could then be generated for those specific actions. This concept was loosely inspired by machine learning methods discovered in another class called "Demystifying AI", and by a concept from CMU's very own Future Interfaces Group:

As a quick interaction storyboard, Michael made a quick UI mockup as it would make more sense to display an app than storyboard. From the first screen, you can see a user could input their specific activity, and then in the next screen a playlist would be created for their Spotify user profile.

Additional precedents:

https://docs.google.com/presentation/d/1kDxSv9IGwKL3XBnsNh_6P-MvuOFNkXuWfKNGh0rpKDA/edit#slide=id.g120ca65627a_1_158

Se A was intrigued by how plants can have their own voice and communicate with their surroundings. A precedent study that revolves around this subject is PlantWave, which is a device that allows users to listen to plants sing.

When the sensors are attached to the plants, PlantWave can detect the electrical variations from the plant and translate them into different pitches that are played by musical instruments. Additional features include adding texture to the sounds and connectivity to music applications like a MIDI synthesizer. Se A found this project effective not only through its interactive design but also through its multifunctional use cases.

From this initial precedent study, Se A storyboarded a device with which people can listen to plants' conversations and better understand the organic network that is around us. In the storyboard below, Jim takes out his nature listening device and hears voices coming from trees, flowers, and the ground. He can hear different voices and personalities and even learns about the mycelium root system underground. Jim comes out of this experience with a stronger relationship with nature and feels closer to his environment.

3/31/2022

The three of us met and discussed each storyboard. We decided to go with the direction of turning the colors of an outfit into music. As we discussed this idea, we asked: what if the colors in your outfit created a color palette that connected to a song on Spotify, so each day you would get a song of the day based on your outfit? These songs could then build up a playlist over time (so maybe there are 31 songs from the month of March that each represent a different outfit). We thought that the mirror would sample colors from the outfit, generate a gradient from those colors, determine song attributes from the gradient colors, and then output the song of the day. Over time, this would generate a playlist for “Colors of Month”.

This would be fun to explore how people express themselves through their clothes and how they could continue to discover music through exploring color combinations in their clothing. We also discussed how much control we would give the user. Would the user be able to change the settings so the songs would be based on their saved songs or specific genres or would you keep it open to all songs to really open up discovery? There are a lot of very interesting aspects of this idea in regards to self-expression and discovery that we can use to make a fun experience for people that changes day-to-day.

We also discussed what would happen if the user could do a deeper analysis to see the connections between certain colors in the gradient and certain aspects of the song, such as the tone being connected to a particular color. Or, if someone always wears the same color, how could this interface suggest adding a pop of color to get different results? There is a lot of variability in both color and music. Either way, we hope this experience can foster a sense of discovery!

"Behr Music in Color"

For our precedent exploration, we chose to look at the "Behr Music in Color" website. It is a collaboration between Katy Perry, Behr paints, and Spotify to match songs with color. The user inputs a song of their choice, and the website generator then analyzes the song (based on tempo, key, and acoustics) and pairs it with a color from Katy Perry's exclusive color palette with Behr. Using Spotify's extensive music library (70 million songs) and streaming intelligence, people are able to "discover personalized paint recommendations" based on their favorite songs. It is very fun and engaging, with a personalization aspect. It intertwines our visual and audio senses, engaging the user in many ways. This audio precedent explores the interaction between selecting a unique song and generating a live visual for it using colors generated from the song’s unique attributes like mood, tempo, key, and genre.

“Discovery is one of the top reasons that keeps listeners coming to Spotify and we’re keeping that spirit alive, taking them on a personalized journey that will spark joy as only music and colour can.”

Updated Storyboard Concept

Experience Layout

The experience begins with the user wearing an outfit that is fed into the camera. The system samples colors from the camera feed, creates a gradient from them, and builds song characteristics from the sampled colors. The color gradient is displayed and a song is selected (either from the user's recently played songs, from similar genres, or completely at random). The song is then added to the user's outfit playlist.
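A minimal sketch of the sampling and gradient steps described above, written in Python for illustration rather than in the prototype's own stack. It assumes the camera frame is a grid of RGB pixels and that the three outfit colors come from averaging three horizontal bands of the frame; the band-averaging approach and the names `sample_band_colors` and `gradient` are hypothetical, not taken from the project.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255


def sample_band_colors(frame: List[List[Color]], bands: int = 3) -> List[Color]:
    """Average the pixels in each horizontal band of the frame (assumed approach)."""
    height = len(frame)
    if height < bands:
        raise ValueError("frame needs at least one row per band")
    colors = []
    for i in range(bands):
        rows = frame[i * height // bands:(i + 1) * height // bands]
        pixels = [px for row in rows for px in row]
        colors.append(tuple(sum(px[c] for px in pixels) // len(pixels) for c in range(3)))
    return colors


def gradient(colors: List[Color], steps_per_segment: int = 4) -> List[Color]:
    """Linearly interpolate between consecutive sampled colors."""
    out = []
    for start, end in zip(colors, colors[1:]):
        for s in range(steps_per_segment):
            t = s / steps_per_segment
            out.append(tuple(round(a + (b - a) * t) for a, b in zip(start, end)))
    out.append(colors[-1])  # finish on the last sampled color
    return out
```

A real camera feed would likely need smarter sampling (for example, ignoring the background), so this only shows the shape of the data flowing from frame to colors to gradient.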

Experience Components

Early Iterating on Prototypes

To see if what we were building was feasible from a time-constraint and technical standpoint, we decided to try building a low-fidelity concept of the camera-to-gradient step using WebGL. Working in WebGL would give us access to custom JavaScript for Spotify's widely available API and live connections to parameters in GLSL shaders.

04/05/2022-4/07/2022

We further discussed connecting color to song.

Spotify can analyze your top 50 songs and your 50 most recent songs, for a total of 100 songs. While we hope this could eventually reach all songs, we thought that for the demo this would be a good starting point for limiting the songs the program would have to pick from. From this breakdown, we can base colors on energy, loudness, danceability, acoustics, and other attributes that Spotify categorizes.

The outfit would be broken down into 3 colors. The program would sort out the songs that don’t match up, leaving a few options for it to choose from. For example, if one color has a certain amount of darkness, this could narrow the pool down to only 25 songs, and so on, until the computer can pick at random from a small number of songs.
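The narrowing step could be sketched as follows. This is a hedged illustration in Python, not the demo's code: it assumes each song is a dictionary of feature values between 0 and 1, that each color contributes one target value for one feature, and that each pass keeps the half of the pool closest to that target. The names `cull_songs` and `pick_song` and the halving rule are hypothetical.

```python
import random
from typing import Any, Dict, List, Optional

Song = Dict[str, Any]  # assumed shape, e.g. {"name": "...", "energy": 0.8}


def cull_songs(songs: List[Song], targets: Dict[str, float],
               keep_at_most: int = 5) -> List[Song]:
    """Repeatedly keep the songs closest to each color-derived target."""
    remaining = list(songs)
    for feature, target in targets.items():
        if len(remaining) <= keep_at_most:
            break  # the pool is already small enough to pick from at random
        remaining.sort(key=lambda s: abs(s[feature] - target))
        # Assumed rule: each pass halves the pool, never going below keep_at_most.
        remaining = remaining[:max(keep_at_most, len(remaining) // 2)]
    return remaining


def pick_song(songs: List[Song], targets: Dict[str, float],
              rng: Optional[random.Random] = None) -> Song:
    """Cull the pool, then choose one of the survivors at random."""
    rng = rng or random.Random()
    return rng.choice(cull_songs(songs, targets))
```

With a pool of 100 songs and two targets, two halving passes leave 25 candidates, which matches the scale of the example above.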

After this initial discussion of approach, we wanted to declare a question to help guide our progress.

How might we incorporate sound to enhance the experience of getting ready in the morning?

Getting ready happens every day. Incorporating sound connects to putting on outfits and the action of looking in the mirror, expands on an everyday experience, and offers a new way of relating to a song. Overall, it makes an everyday activity more exciting and something to look forward to.

We then decided to lay out the sequence of events based on the storyboard:

We next wanted to consider how to make this experience more interactive with user... instead of a mirror just spitting out a song.

Possible Features of Interface:

-matching colors of outfit to song

-adding color to change song

-song gives suggestion of colors to build outfit from

Following this, we discussed whether it would be its own app or website that holds all of these features, or a program paired with Spotify.

The biggest barrier that we seem to be hitting is how to connect color to songs. We need to define the connection between song and color that can then allow this to actually work.

We did some research on what other projects have done in the past.

This device can turn any color into any sound: https://www.youtube.com/watch?v=2avOFF6Xl4w

This device makes a sound when it comes in contact with color. The user codes what color creates what sound, creating a fun interactive experience between the user and the colors of their space.

Roy G Biv: https://www.fastcompany.com/3033213/turn-color-into-sound-with-this-this-synesthesia-synthesizer-app

This app uses color to create music.

"All colors can be uniquely identified by their hue, saturation, and brightness. By translating these values into musical variables like waveform, oscillation, and attack/decay time, the app can determine the sound of the notes you play on the app’s keyboard."

Making music with Nsynth: https://www.youtube.com/watch?v=0fjopD87pyw

-Hector Plimmer, explores new sounds generated by the NSynth machine learning algorithm - using NSynth Super an open source experimental instrument.

Musical Colors: https://www.yankodesign.com/2012/07/20/musical-colors/

"The size of the colors influences the volume and frequency of the notes played.

Color detection and sound generation were created and are controlled using Processing code.

The system of audible color is based on a marriage between basic color and music theories.

The colors of red, blue, and green are the visual foundation for color-mixing and the music notes A, D, and F are the base triad that corresponds to the colors.

The secondary colors (colors made when the foundational three are mixed) of purple, teal and brown are tuned to the musical triad C, E and G.

The visual of the mixing of red, blue and/or green mirrors the aural output of combined notes.

The ‘painting’ aspect is not restricted to water droplets from a pipette.

Numerous experiments were performed using substances such as acrylic paint, food dye in milk with soap, and ordinary household objects.

Each investigation created a new type of fun and easy gestural music making."

Moving forward from research, we need to define how colors translate to songs. We also need to define the audio experience (are there sounds when you approach the mirror? How do we envelop the user in the audio experience, rather than having it just come from their phone?).

We made a rough setup: what if black to white mapped to deeper tone to lighter tone, and possibly blue to red mapped to less to more energy? We decided these relationships needed to be explored further before being finalized, and talked about possibly breaking them down by RGB spectrums and connecting each to certain aspects of music to help map songs to colors to outfits.

4/10/2022-4/11/2022

We continued to ask questions to fully flesh out this concept, along with trying to define the color spectrums.

As of right now, the black to white spectrum marks tone. Then, green is connected to danceability, blue to frequency/waveform, and red to energy.

Dina also checked in to make sure we were prioritizing the audio and its role in the MR experience. This led to questions about how sound will function in the experience so that it is more than just a song playing on your phone and is instead part of the whole experience.

***Defining sound's role in MR experience

Is there sound when a person approaches the mirror (to initially engage and hold the space before a song is chosen)?

Are these sounds just sound effects or music/songs?

Does sound come from mirror/tablet or surrounding speakers?

How do we emphasize the audio element in relation to the visual mirror?

The Context: Getting Ready for the day is a daily ritual... what if we could make it more fun and something to look forward to using audio? Connecting color palette of outfit to songs engages user and allows discovery in both the colors they wear and finding new music.

Another thought...

What if there is a social element as well? A user could see others’ color palettes/songs of the day and have the ability to discover music by seeing friends’ color combinations and how they led to certain songs. This creates something to talk about (compare/contrast).

4/12/2022

We met and first talked about the XR interaction and color spectrums.

Michael thought about what if we used audio as cues instead of screen/click interactions. For example: clap to start 3 second timer to match colors. He also brought up live audio aura generated through colors that the camera tracks so that there is an audio reaction to person approaching camera/mirror. ie. Pump higher tempo audio when there is more green to indicate/hint at the idea that this color will show a faster paced song and lighter tones will change the frequency of audio wave. (https://musiclab.chromeexperiments.com/Oscillators/)
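The live audio aura idea can be sketched as a pulsing tone whose pulse rate follows the amount of green and whose pitch follows overall lightness. This is only an illustrative Python sketch under assumptions: the ranges (60 to 180 pulses per minute, 220 to 880 Hz), the use of a plain RGB average as "lightness", and the names `aura_parameters` and `render_aura` are all hypothetical.

```python
import math
from typing import List, Tuple


def aura_parameters(r: int, g: int, b: int) -> Tuple[float, float]:
    """Return (pulses_per_minute, tone_hz) for a tracked color."""
    brightness = (r + g + b) / (3 * 255)       # 0.0 (black) to 1.0 (white)
    pulses_per_minute = 60 + (g / 255) * 120   # more green -> faster pulse (assumed range)
    tone_hz = 220 + brightness * 660           # lighter color -> higher pitch (assumed range)
    return pulses_per_minute, tone_hz


def render_aura(r: int, g: int, b: int, seconds: float = 1.0,
                sample_rate: int = 8000) -> List[float]:
    """Generate mono samples in [-1, 1]: a sine tone with a pulsing volume envelope."""
    pulses_per_minute, tone_hz = aura_parameters(r, g, b)
    pulse_hz = pulses_per_minute / 60
    samples = []
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        envelope = 0.5 * (1 - math.cos(2 * math.pi * pulse_hz * t))  # rises and falls once per pulse
        samples.append(envelope * math.sin(2 * math.pi * tone_hz * t))
    return samples
```

The samples could be written to a WAV file or streamed to a speaker; that part is left out because it depends on the audio setup.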

The interaction then sort of becomes a conversation like the musicolour audio example shown as an example of cybernetics. (https://www.historyofinformation.com/detail.php?id=2013)

For the color spectrums, we decided to lay out all the elements available in the Spotify API. We switched the black-white spectrum to tempo, as we felt faster tempos would coincide with a lighter color while slower tempos would coincide with a darker color. We switched blue to valence to match up with the feeling given off by the song. We kept red with energy and green with danceability.
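As a rough sketch of this mapping, one sampled RGB color could be turned into target values like so. The channel-to-attribute pairing follows the paragraph above; everything else is an assumption made for illustration: the linear scaling, the use of the RGB average as lightness, the 60 to 180 BPM range, and the function name `color_to_targets`. The key names mirror the `target_*` style of Spotify's recommendation parameters.

```python
from typing import Dict


def color_to_targets(r: int, g: int, b: int,
                     min_bpm: float = 60.0, max_bpm: float = 180.0) -> Dict[str, float]:
    """Map one RGB color (0-255 per channel) to song attribute targets."""
    brightness = (r + g + b) / (3 * 255)  # black = 0.0, white = 1.0 (assumed lightness measure)
    return {
        "target_energy": round(r / 255, 3),        # red -> energy
        "target_danceability": round(g / 255, 3),  # green -> danceability
        "target_valence": round(b / 255, 3),       # blue -> valence
        # lighter colors -> faster tempo; the BPM range is an assumption
        "target_tempo": round(min_bpm + brightness * (max_bpm - min_bpm), 1),
    }
```

For example, pure white would ask for the highest energy, danceability, and valence at the top of the tempo range, while black would ask for the lowest of each.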

We then made a to-do list. We needed to put together the systems map and journey map, create a prototype with a JavaScript implementation of the Spotify API requests and final song output, and create the live demo with color indication (RGB val in corner). We also discussed adding a live audio wave to the prototype.

First, we made the systems diagram. The components of the experience include the camera/mirror, computer, LED lights, and speaker that all react to the presence/outfit of the user. These then connect to the Spotify API. The song chosen would be displayed on the screen, along with being added to the Spotify playlist which is accessible from any smart device logged into the user's Spotify account.

Then, we made the user journey map. We chose students as the focus audience (students often have a morning ritual of getting ready and then listen to music throughout the day). The student wakes up in the morning and composes an outfit. They walk up to the mirror to prompt ColorSynth. The student reacts to the visual color palette and audio feedback. The student confirms the outfit and the selection from ColorSynth. The student sees the song match displayed. The student walks to class and puts on their monthly outfit color playlist. Finally, the student is able to share the outfit and song with others on Spotify, along with seeing others' color palettes and songs of the day. Our journey map shows this story along with the engagement of the user and the design opportunities in each segment.

After finishing these two maps, we talked about how to move forward. Michael was going to work on the JavaScript with the Spotify API. Se A and Rachel were going to look into TouchDesigner and how we could make ColorSynth respond with audio to the user approaching the mirror.

4/13/2022

Updated Prototype of Colors You're Wearing:

Although there weren't many visual changes to the color matching prototype, we began taking a look at Spotify's API to see what could be done to turn those gradient colors into information that could be used to generate a unique API call for a specific outfit.

We ended up choosing this API call:

Based around this call, we could feed specific inputs like target danceability, valence, energy, tempo, etc to get more specific results, and then specify a specific number of results for the logic part of the demo. Although we had not figured out how to get these colors into Spotify's song classification metrics yet, the goal would be to take colors like pink or blue and give them values.

Above is the first concept of the workflow described. Earlier in the code, values like tempo and valence can be written as global "window" variables in JavaScript using another function, and then be used in the getUserRecom function to specify the API call whose results are returned for the user.
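To make the shape of such a request concrete, here is a hedged Python sketch that only builds the request URL and does not contact Spotify. The endpoint path and the `limit`, `seed_tracks`, and `target_*` parameter names are written as recalled from Spotify's Web API reference and should be checked against the current documentation, since endpoint availability can change. The function name `build_recommendation_url` and the track IDs in the usage note are hypothetical; the original demo used a JavaScript function, getUserRecom, whose code is not reproduced here.

```python
from typing import Dict, List
from urllib.parse import urlencode

# Assumed endpoint, based on Spotify's Web API reference.
RECOMMENDATIONS_URL = "https://api.spotify.com/v1/recommendations"


def build_recommendation_url(targets: Dict[str, float], seed_tracks: List[str],
                             limit: int = 20) -> str:
    """Build a recommendations URL from color-derived targets and seed track IDs."""
    if not 1 <= len(seed_tracks) <= 5:
        raise ValueError("this sketch expects between 1 and 5 seed tracks")
    params = {"limit": limit, "seed_tracks": ",".join(seed_tracks)}
    params.update(targets)  # e.g. {"target_energy": 0.8, "target_valence": 0.4}
    return f"{RECOMMENDATIONS_URL}?{urlencode(params)}"
```

Calling `build_recommendation_url({"target_energy": 0.8}, ["trackA", "trackB"])` yields a URL that a client would then request with the user's access token in an `Authorization: Bearer` header.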

4/14/2022

The three of us met with Elizabeth to talk through our concept, and she asked a lot of great questions. First, she felt we could simplify our systems diagram as there are a lot of extra components that might not necessarily be needed. She thought this could all be portrayed on a smart phone, and we should think more about the physical interaction flow. She thought we should think more about the transition from color to music and how much confirmation the program needs to make the interaction smoother for the user.

She asked why we chose outfits as outfits/wardrobes not only have variability in color, but also style and how are those differences accounted for. She brought up a really good point of maybe changing the scope to colors of contexts and allowing people to experience this either by building their color palettes based on surroundings or maybe adding a specific context (like a clothing store for a retail experience to be more engaging). This shifted our thoughts to focusing on the mood and activities the user is engaging in so maybe it is less about the outfit and more about the relationship between color and song. By widening this, it also would rely more on the use of a phone and less on a mirror that can't move, making it more interactive.

She also shared some example projects, like the "Painted Earth" project, which paints on your phone as you walk, creating cool pieces of art from exploring the environment. While this has less to do with audio, it made us think: what if someone could collect colors from their environment, which could then translate into a song or music? Maybe you could build a playlist based on the places you have been. These are just some thoughts.

Based on our conversation, we decided to revisit our concept and possibly simplify to give us a solid direction to move forward.

4/15/2022

We met to discuss Elizabeth's feedback and how we want to move forward.

First, we needed to narrow down the reasoning for outfit/colors -> song (is there a specific reason to home in on outfits?). Clothes bring up a lot of different variables in regards to fashion and style, so how can we narrow the experience down to color? We started thinking about possibly shifting our approach while keeping the morning routine as the project's focal point. Instead of focusing on clothes, how can we focus on the specific way colors are mapped to the emotions and characteristics of songs? This narrows the experience down to the relationship between color, mood, and music as a reflective tool for an individual in their morning routine. This connection allows someone to decide how they are feeling, upload the colors they are wearing, and decide if the song fits to start off their day and begin building a morning playlist. This application would connect to the mirror, leading to a visual-auditory experience of the song that weaves in the color and emotion the user input.

In regards to our thoughts for the application:

This could start with initial training, where the user answers survey questions like: “How do you feel wearing this color?”

As the user then wears that color, an audio-based interaction will prompt discussion, like “it seems like you’re ____ wearing this color today”

We visualized the sequence as follows: the user takes an initial survey (a one-time step), the user gets ready and picks an outfit color, ColorSynth responds and says “You seem ____ in this outfit today! Let’s look for a _____ song”, the user confirms or rejects this, which further adjusts ColorSynth's dataset to better match the user, and ColorSynth then outputs the color of the day and sends the result to Spotify.

Focus on Morning Routine and Self Reflection

We see an opportunity for this experience to be a part of the morning routine and, in a way, to help users reflect on themselves and how they feel in the morning. That reflection can then connect to color choice and carry this sense of self into their music, growing a stronger connection to themselves and their self-expression. The connection to the mirror emphasizes the self and places this experience in a personal space where people can think, reflect, and prepare for the day.

Elizabeth also suggested narrowing down the system diagram objects (as it seems like there are a lot of components that could be simplified.) We decided this made sense so we omitted the LED lights and chose to focus on the use of a smart device and how that would then connect to the speaker and mirror.

For over the weekend, we need to continue toward demo (Convert list of 10 recommended songs to 100?, add input colors, cull down songs using color data, select final song, add song to test playlist) and then wireframe of how the application would look w/ questions.

4/16/2022

Wireframing

Se A and Rachel met to discuss what the sequence on the application could be like.

First, it would prompt the user to get started and ask for the user's name to create an initial connection, and then lead into some questions that let the program start making connections between color and emotion.

Next, the user would be asked what colors they wear often and how they feel wearing blue, red, and green. We weren't sure if there should be a black and white section, too.

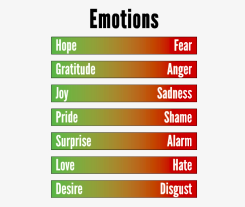

We decided to pick three spectrums for each question: happy to sad, energetic to calm, and hopeful to dark to help assess the emotion connected to that color and how that can better help us connect music. We originally had many different emotion buttons but that seemed more difficult to sift through so we wanted to narrow down on three types of emotion spectrums (many emotions also have overlapping characteristics so trying to pick emotions that have pretty distinct differences).

Next, the application wants to assess the day by asking the user to import the colors they are wearing (picture or maybe have a color spectrum to pick?). Then, they pick how they are feeling and the application would give feedback before "calculating".

A song will appear along with options to confirm that it fits or to say it is not quite right, in which case the user can retry.

After this initial interaction then the following experiences will only assess based on the colors the user is wearing and how they are feeling that particular morning.

We need to refine, but this creates a foundation.

Michael also continued to work on the Spotify API, focusing on getting a pool of 100 songs and on how that pool is narrowed down based on the user's decisions.

4/18/2022

So instead of the colors being determined by our preset choice of green is danceability and so on, the color now depends on the user's responses, building a relationship of color, emotion, and music based on their preferences.

Below is an example of how this could look based on their responses that would determine a song.

From app to song calculation and auditory/visual feedback on mirror:

Updated Storyboard:

***We also decided that there would be auditory feedback from the mirror as someone answers the questions. Each answer creates sounds that build on each other, and the result ends up matching the song that is chosen based on their color and emotion choices. This leads to continuous feedback and more of a relationship with the mirror in their space.

Figma application phone prototype: https://www.figma.com/proto/wjY83C0Y0XbVj1bdRTiXh7/Audio-based-Interaction?node-id=117%3A678&scaling=scale-down&page-id=114%3A473&starting-point-node-id=117%3A678

Animation Examples for color animation for mirror:

4/19/2022

Further Discussion and Progress

As we continued to think about this project, we revisited all the things available on Spotify and decided on these 5 to include:

Danceability: Describes how suitable a track is for dancing based on a combination of musical elements including tempo, rhythm stability, beat strength, and overall regularity. A value of 0.0 is least danceable and 1.0 is most danceable.

Energy: Energy is a measure from 0.0 to 1.0 and represents a perceptual measure of intensity and activity. Typically, energetic tracks feel fast, loud, and noisy.

Speechiness: Speechiness detects the presence of spoken words in a track. The more exclusively speech-like the recording (e.g. talk show, audio book, poetry), the closer to 1.0 the attribute value. Values above 0.66 describe tracks that are probably made entirely of spoken words. Values between 0.33 and 0.66 describe tracks that may contain both music and speech, either in sections or layered, including such cases as rap music. Values below 0.33 most likely represent music and other non-speech-like tracks.

Tempo: The overall estimated tempo of a track in beats per minute (BPM). In musical terminology, tempo is the speed or pace of a given piece and derives directly from the average beat duration.

Valence: A measure from 0.0 to 1.0 describing the musical positiveness conveyed by a track. Tracks with high valence sound more positive (e.g. happy, cheerful, euphoric), while tracks with low valence sound more negative (e.g. sad, depressed, angry).

Then based on these, we started writing possible questions for the app that could help determine the emotion associated with color for selecting song:

Danceability

The color ____ makes me wanna get up and move

_____ is a color that feels like dancing

I’m ready to move in the color _________

The color _____ reminds me to dance like no one’s watching

Energy

I characterize ______ with being energetic

______ is a color that represents a lowkey energy

The color ____ makes me feel energized

I’ll feel ready to take on the day in the color _____

(Danceability + Energy can have shared questions)

Speechiness

To me, ______ feels like a loud color

The color ______ feels quiet compared to other colors

I feel extroverted in the color ______

I enjoy wearing the color ______ in public a lot

Tempo

The color ______ makes me feel upbeat

I feel calm and slow when I wear ______

Valence

The color ______ makes me feel happy

I feel sad when I wear _______

We chose to focus on these 5 colors below for the sake of the demo. We further defined the mirror's role as providing auditory feedback as someone interacts with the app, which then leads to the song after the app calculates it. We need to figure out how to generate audio based on the answers to questions. We also have to create a visual animation that would align both with the audio feedback and the song itself.

We talked about this with Dina as we felt a little overwhelmed with these three things happening all at once in between the phone and mirror. She suggested maybe showing this all on a TV screen so people interact with the screen while also having a "mirror" with the visuals for the demo so it would be all in one place.

Moving forward: Se A was going to work on understanding Faust which essentially builds an instrument to then connect to TouchDesigner. Rachel was going to work on how to build a visual animation for the mirror that could link up with the Faust and visualize the sound into color/shapes. Michael was going to continue to work on the Spotify API.

4/21/2022

Rachel worked on creating an audio-reactive animation in TouchDesigner.

The first video shows the animation that reacts to the LFO, while the second one adds the video from the computer camera, simulating a "mirror" with the animation overlaid.

Se A continued to research Faust and discovered Flutter as a possible avenue for generating sound.

Michael continued to work on the Spotify API.

At this point, Michael figured out the playlist addition implementation to the Spotify demo, allowing people using the demo to add recommendations to a new playlist (labelled "API" as just a test).

This process was done by taking the 20 generated suggested songs from the recommendation call, choosing a random integer between 0 and 20, and then submitting an additional Spotify API call to add the song at that array index to a specified playlist (in this case identified by the title "API"). For the sake of the demo, this feature was turned off so that random "API" playlists wouldn't be generated under Michael's account.
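
As a rough illustration of the step described above, the sketch below picks one track at random from a list of recommendations and builds the request that would add it to a playlist. It is not Michael's actual code: the function names, the fake track list, and the "PLAYLIST_ID" placeholder are assumptions, and no network call is made. Note that a list of 20 songs has valid indices 0 through 19, so the sketch draws the index from the list length.

```python
import random


def pick_random_track_uri(tracks, rng=random):
    """Pick one track URI at random from a list of recommended tracks."""
    if not tracks:
        raise ValueError("no recommendations to choose from")
    index = rng.randrange(len(tracks))  # valid indices are 0 .. len(tracks) - 1
    return tracks[index]["uri"]


def build_add_to_playlist_request(playlist_id, track_uri):
    """Return the URL and JSON body for adding one track to a playlist.

    Assumes the Spotify Web API "add items to playlist" endpoint; the request
    would still need to be sent as a POST with an OAuth access token.
    """
    url = f"https://api.spotify.com/v1/playlists/{playlist_id}/tracks"
    body = {"uris": [track_uri]}
    return url, body


# Hypothetical stand-in for the 20 tracks returned by the recommendation call.
fake_tracks = [{"uri": f"spotify:track:example{i}"} for i in range(20)]

uri = pick_random_track_uri(fake_tracks, random.Random(0))
url, body = build_add_to_playlist_request("PLAYLIST_ID", uri)
print(url)
print(body)
```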

4/24/2022

From the previous questions we had generated that correlated with the Spotify themes, Rachel updated the Figma prototype so that the questions would help create a scale of how the colors match the different Spotify themes.

Se A made an audio visualizer in TouchDesigner. The audio visualizer reacts to bass, claps, and kicks.

We made sure that each color had a question for danceability, energy, speechiness, tempo, and valence. We chose to keep it narrowed down to red, green, blue, and black.
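
One way the question answers could be turned into a per-color scale is sketched below. This is an assumption for illustration and not the scoring used in the prototype: it supposes each question is answered on a 1 to 5 agreement scale, rescales answers to 0.0 to 1.0 to match Spotify's feature ranges, and flips negatively worded questions (such as "I feel sad when I wear ___"). Tempo is measured in BPM, so a tempo score on this scale would still need a separate mapping.

```python
from collections import defaultdict


def score_color(answers):
    """Average quiz answers into a 0.0-1.0 score per feature for one color.

    answers: list of (feature, rating, is_reversed) tuples, where rating is a
    hypothetical 1-5 agreement rating and is_reversed marks questions whose
    agreement means a LOW feature value.
    """
    values = defaultdict(list)
    for feature, rating, is_reversed in answers:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        value = (rating - 1) / 4  # rescale 1..5 to 0.0..1.0
        if is_reversed:
            value = 1 - value
        values[feature].append(value)
    return {feature: sum(v) / len(v) for feature, v in values.items()}


# Made-up answers for red, for illustration only.
red_answers = [
    ("danceability", 5, False),  # "The color red makes me wanna get up and move"
    ("energy", 4, False),        # "The color red makes me feel energized"
    ("energy", 2, True),         # "Red is a color that represents a lowkey energy"
    ("valence", 1, True),        # "I feel sad when I wear red"
]

red_profile = score_color(red_answers)
print(red_profile)
```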

4/25/2022

Michael began working on the quiz with the prototype. At this point, he took a break from the API portion of the demo and took time to focus on building the side quiz using HTML and CSS over the existing WebGL framework. Using the Figma screens that had been previously created, he built each screen using classic CSS styling and additional hover animations to add more depth to the demo.

4/26/2022 - 4/27/2022

We had a long conversation about how to add audio to give auditory feedback for answering the survey questions. Se A and Rachel talked to Elizabeth about their trouble with TouchDesigner, and she suggested trying to add these sounds manually. We shifted gears and decided to manually pick short song excerpts for the questions. We were then able to figure out how to add audio in Figma as auditory feedback for the onboarding. We also felt it didn't flow very nicely if there were random noises after each question, so we changed our idea to have a short song melody play once all of the questions for that specific color were answered. Using Anima, a Figma plugin, we were then able to trigger sound when the prototype switched to the color frame.

Sounds for RED

Sounds for BLUE

Sounds for GREEN

Sounds for BLACK

Rachel also figured out how to add animation to a Figma frame to make the frame more reactive, using the Motion plugin.

4/28/2022

Rachel revisited the everyday UI in Figma. First, we want people to reflect on how they are feeling, so they are asked how they feel that day. This will act as a label for the day and prompt self-reflection. Next, they would upload the colors they are wearing. From this the app will calculate a song. The user would then be asked to confirm or retry. If they retry, they will return to the color upload page, but with the ability to edit the different scales of how that color correlates with feeling and song characteristics. Once adjusted, the app will generate a new song. Upon confirming, the song will be named song of the day. From there, a user may add the song to their playlist. They can then review their "everyday log" that records each day's colors, feelings, and song. Or they can go to the social section and look at what their friends are feeling, their colors, and their song of the day.
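
The post does not spell out how the app would calculate a song from the uploaded colors. As one possible sketch, the per-color scales could be averaged across the colors worn that day and passed along as target values (Spotify's recommendation call accepts parameters such as target_danceability and target_energy). The averaging approach, the function name, and the sample profiles below are all assumptions.

```python
def target_features(worn_colors, color_profiles):
    """Average the feature scales of the worn colors into target_* values."""
    if not worn_colors:
        raise ValueError("at least one color is required")
    features = color_profiles[worn_colors[0]].keys()
    return {
        f"target_{feature}": sum(color_profiles[c][feature] for c in worn_colors)
        / len(worn_colors)
        for feature in features
    }


# Made-up per-color scales on a 0.0-1.0 range, for illustration only.
profiles = {
    "red": {"danceability": 0.75, "energy": 1.0, "valence": 0.5},
    "blue": {"danceability": 0.25, "energy": 0.5, "valence": 0.5},
}

targets = target_features(["red", "blue"], profiles)
print(targets)
```

If the user retries and edits a color's scales, the same function can simply be run again with the adjusted profile to produce new targets.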

Rachel also was thinking about how to share our concept in a brief concept video and sketched out a rough storyboard to help map out scenes and ask questions. Se A and Rachel would work on the UI and video over the weekend, while Michael worked on the demo screen.

At this point, Michael was completing the quiz part of the demo, implementing a final recommendation and sound based on CSS audio button features for the RGBW screens, and then moved on to polishing the live display for the touchscreen. An additional "mouse fluid" feature was added to give the display some distortion and hue rotation effects, and additional 3D paneling was added around the webcam display to make it feel more interactive.

Se A created an After Effects animation on top of the mirror to show the visualizations that appear automatically as soon as the user opens the app and hears a song. The top and bottom audio bars represent the colors the user is wearing to enhance the experience.

4/29/2022 - 5/2/2022

Everyone continued to make progress.

Rachel updated and added to the Figma UI.

The social button would allow users to view how their friends feel, what colors they are wearing, and what their song of the day is. This can then act as a conversation starter where people can bond over music and how they feel, and continue to discover new music based on conversations with others as well.

The everyday log button would lead the user to a screen that has logged all their past days on the app. This allows a user to revisit past days and songs, and if they want to, they can connect to Spotify from there. The "Jill" page is a sample of what a friend's page could look like when you click on Jill from the "social" wireframe. The "You" page is an example of clicking on one of your everyday log songs to look at the details.

On 5/2, Se A and Rachel met to take footage for a brief concept video. We followed the storyboard plan pretty closely, but adjusted it so that you could see the mirror and phone screen at the same time.

5/3/2022

Se A worked on putting the concept video together.

Michael worked on the demo. He got the UI working so that visitors can enter their names, feelings, and colors, answer some questions, and receive a song of the day.

Rachel put together the presentation slides (made gifs of the UI, too) and updated the service diagram and user journey map.

gifs to show UI flows

5/4/2022 - 5/5/2022

We met to go over what we have and prepare for the final presentation. We wanted to go over each aspect of our presentation and see what we needed to add or improve in the final days.

We also discussed and set up the demo to figure out how the setup would look and what equipment we would need. We connected the demo to the large touch screen in our classroom and figured out how to let people interact with the demo via the touch screen.

The color interaction is very fun to play with on the screen and the UI was pretty intuitive. Just need to add the audio!

What remained for Friday mostly involved finishing touches to the video, demo, and presentation. Michael was going to add the audio and color screens to the demo. Se A was going to put finishing touches on the video. Rachel was going to update the presentation. Michael also said he would bring his speaker and webcam to connect to the touch screen for the demo.

Here the color frames were added... next is finalizing the audio!

Presentation:

https://docs.google.com/presentation/d/1yFfahX85xtCkZNzuKZcOgzreHsCvRBuBfLaHL2anqxg/edit?usp=sharing

Video:

Demo:

Reflections and Takeaways:

Michael:

I quite enjoyed working on this project as it was a continuation of our exploration of the senses, a topic we have been focusing on pretty heavily this year. ColorSynth was a creation that resulted from weeks of exploring, pivoting ideas, and trying to figure out a lot of code, and I think it all turned out very well in the end. The interesting idea that Spotify is becoming much more of a social media platform these days made us want to build a project around it, and what better way of doing it than through the morning routine of looking at yourself in the mirror. Much time went into the smallest interactions, like how the color would be matched to specific moods, what the style of the app would look like, and how users would even interact with a screen/mirror, and each iteration pushed ColorSynth in a direction that we would not have expected at first. The project covered a lot of ground that we did not know or expect to be covering, which proved to be a challenge in and of itself, but the exploring part of it was fun. Of course, I think some parts could be improved with more time, like ColorSynth making a deeper connection with each individual user vs. being something that everyone can do, but I think it does a good job of raising the initial question: how can designers work themselves into the manifestation of daily rituals?

Rachel:

I really enjoyed this exploration of audio (and the other senses) in connection to emotion, music, and color, and felt we laid a nice foundation that we can continue to work on and build up. I see a lot of potential in continuing to make the UI better and embed more ways to interact with audio, like the Color Auditory Index idea. How cool would it be to explore colors you have worn, listen to their melodies, and revisit the songs and emotions associated with them? We spent a lot of time focusing on how to build a relationship/conversation between the app and user, and now I feel there are a lot of areas that can continue to grow to make the app more fun and engaging. I'm really glad our project took the direction of becoming more personal to each user and feel this makes the concept that much more special and able to give each person a different, meaningful experience. ColorSynth raises a lot of interesting questions about the senses and how they impact our everyday rituals and routines. It was very interesting to explore how we could impact something like a morning routine to allow people to grow a stronger sense of self and discover through their senses (through what colors they wear, the sounds they hear, how they are feeling, their ability to recognize how they feel, and exploring more music both through their own ColorSynth and by connecting to their friends). I feel the social aspect is especially exciting as it opens up a conversation about feelings and can help grow an understanding of others as well. I have never worked on an app project before, so this overall was a great experience to experiment with building UI and how we could grow a relationship between user and interface. Michael and Se A are awesome, and it was great to work together as a team.

Se A:

I found our project really combined the unique senses of sight, touch, and hearing to create an overall experience that enhances one's daily routine. I believe this concept allowed us to explore how people relate to a color in their own unique way by creating a conversation between the user and the UI. It was nice to keep building upon our original ideas and tweak certain moments to enhance the interactions, no matter how big or small. I feel that our addition of audio feedback as users fill out their initial survey really hit the mark on what this app would feel like if it were fully created. I do believe that there are some additional interactions we can add to completely flesh out the app UI. This was the first time Rachel and I have worked on a project so heavily focused on the app that it led us to consider the branding of our concept. It was helpful to think about how Spotify developed their own interface and created an app that doesn't end with just music. They added in a social feature that really made them stand out from the crowd, which helped us think about the way users can interact with each other in our own ColorSynth app. I think our project really helps start a new conversation on what it means to wear specific colors and listen to different types of songs. It adds a new layer and meaning to an everyday activity. It was great to work with Rachel and Michael since we all divided up the work and completed our individual tasks for this project.
