Audio-Based Mixed-Reality Experiences

- ezhao27

- Mar 29, 2022

Updated: Apr 30, 2022

Jiaqi Wang, Eric Zhao

3-29 // Initial Exploration

Towards a Framework for Designing Playful Gustosonic Experiences:

In class, we briefly discussed iScream!, a gustosonic experience built around an ice cream cone that plays a variety of sounds whenever someone starts eating it. Initially, we found the concept hilarious and didn't think of it as much more than a fun experiment combining eating with an unexpected audio element. However, reading the paper made us realize that the idea opens up many possible directions, and that an experience can be examined in as much detail as you care to explore.

The researchers behind iScream! mention various kinds of participant responses to eating the ice cream, noting it "shifted eating to the foreground of attention", "facilitated transition into a fantasy world", and "provided additional eating rewards". Even though eating is a universal experience, it was interesting to note how almost every participant had a unique take on the experience, showing that such a simple concept can be interpreted in many ways. Additionally, the researchers noted how people's eating experiences changed as the ice cream melted and dripped over time, facilitating new interactions with their gustosonic system.

When thinking about our own project, if we choose to augment an existing human experience or interaction, it will be useful to take into consideration all the nuances involved in said experience, as they open the door to new interaction possibilities and increase the potential of our final ideas.

Toward Understanding Playful Beverage-based Gustosonic Experiences

Another case study in gustosonic experience design is Sonic Straws. Similar to iScream!, Sonic Straws is a beverage-based gustosonic system that supports playful, personalized sound while drinking through straws. It makes sense to me to reimagine how drinking and eating could be playful and social, since these two activities have been a way for humans to connect with each other since the hunter-gatherer age. Eating is one of the most universal staples of community and life, while drinking has recently become a new vehicle for socializing among young people with the prevalence of boba. Grabbing boba together is about more than satisfying the need for hydration; it is also a way to spend quality time with each other. If the goal behind drinking is to socialize, designing for playfulness is more important than it appears. It is no secret that taste plays a pivotal role in the drinking experience, but from the reading, I learned that the other senses, emotions, and memory are all engaged along with taste, and that sound is especially powerful in shaping the drinking experience.

From the research paper, I noted several findings that might also apply to my project:

Users might disengage with a novel system after the initial excitement diminishes. They need richer sound feedback that is constantly evolving.

Leave room for unintended uses. The research found that users started using the sonic straw as a means of self-expression, which was not intended, but the design was flexible enough to let people use it the way they wanted.

Think about how solo interaction would differ from collective interaction.

Allow personalization to build emotional durability.

3-30 // Precedent Research + Brainstorming

We categorized our precedent research into 3 main directions.

The Action we chose: Longboard Dancing

We chose longboard dancing as the main action to design for because both of us are big fans of skateboarding and longboarding. Longboard dancing is also rhythmic by nature, which makes it an appropriate domain for this project.

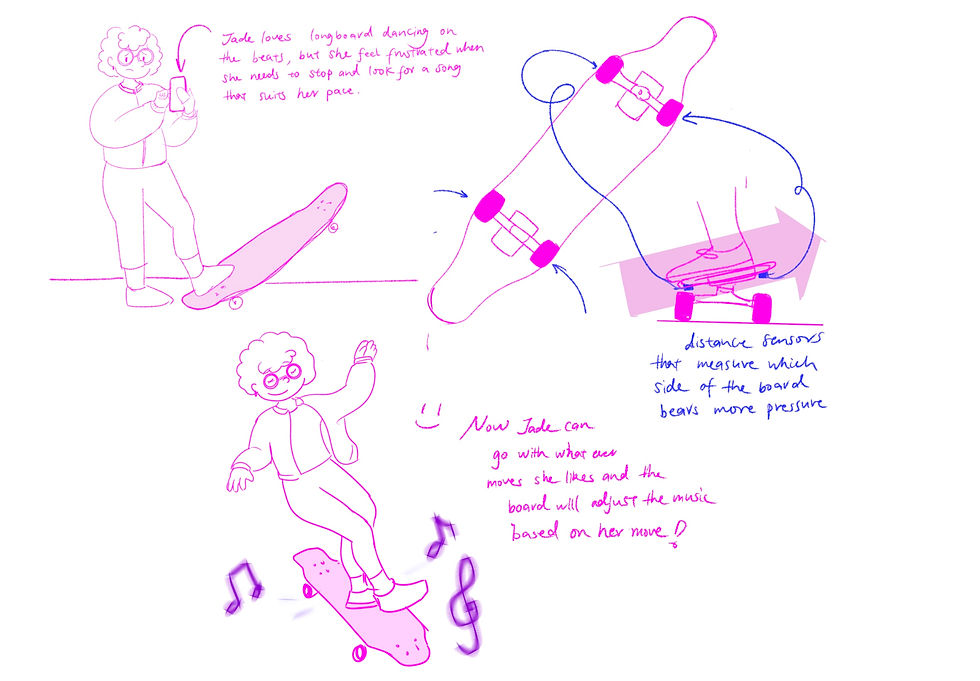

Storyboards/Initial Concept

Our ideas for a mixed-reality interface involving longboard dancing were to either 1) create a behavior change or 2) augment the longboard as a tool of expression. In more depth, we imagined the longboard either learning a dancer's style and adapting music to match it, or serving as a way to learn dance moves and gamify longboard dancing.

We decided to go with the second route, an instrument of expression, since forcing a user into a very specific use case (either learning or gamifying) could overshadow the fun of longboard dancing in the first place and make the overall experience harder to engage with. Because of this, we settled on the idea below: using a longboard as an audio controller that manipulates music based on tilt, speed, rhythm of footsteps, and other aspects of dancing.

Case Study Part II

We conducted extensive research but weren't able to find a similar system. The closest to what we imagine is this ESK8 Sport LED Strip Controller, which changes the color and mode of an LED strip based on the speed of the longboard.

The system is controlled by manipulating speed, which is described by three states: standby (static), moving (velocity and acceleration point in the same direction), and braking (velocity and acceleration point in opposite directions). It is useful to reference how they mapped different states to different outputs.

A difference between this accessory and our longboard controller is that the LED strips are designed to work with an electric skateboard, and draw data straight from the board's speed controller. On the other hand, we are working with a non-powered longboard so we'll have to use different methods to gather live data about the board.
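The three speed states from the LED controller can be sketched in a few lines. This is only an illustration of the classification rule described above, not the controller's actual firmware; the function name, the `standby_eps` dead band, and the choice to treat coasting (zero acceleration) as "moving" are all assumptions.

```python
def classify_speed_state(velocity, acceleration, standby_eps=0.05):
    """Classify board motion as 'standby', 'moving', or 'braking'.

    velocity and acceleration are signed values along the direction of
    travel. standby_eps is an assumed dead band around zero speed.
    """
    if abs(velocity) < standby_eps:
        return "standby"
    # Opposite signs: the board is slowing down.
    if velocity * acceleration < 0:
        return "braking"
    # Same sign, or zero acceleration (coasting), counts as moving here.
    return "moving"
```

Each returned state could then be mapped to a different output, the same way the controller maps states to LED modes.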

Mapping Sensor Outputs to Sounds

We started brainstorming ways to capture different movements on a longboard using sensors and we came up with a list of variables below:

Variables:

Relative position of left & right feet (compared to each other, or compared to the head & tail of the longboard)

Direction of feet relative to the board orientation.

Speed

Body orientation relative to the board position

Environment factors

Board tilt

...and then we selected a few that are feasible given the sensors we can access, and separated them into two categories, analog and digital:

Analog sensors (range)

Board tilt

Body orientation

Speed

Digital Sensors (0-1)

Footsteps (timing)

Footsteps (position on board)

4-05 // Sound Design and Arduino Prototyping

Sound Design

We started looking into sound design. Below is a collection of sound samples:

Controlling speed and volume with Max/MSP

Longboard Sensor Prototyping

We initially focused on gathering tilt angle and speed data from the longboard, and planned to add a pressure sensor map to identify foot placement on the board at a later stage. Here we are getting tilt angle data from an accelerometer taped to the board:
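For reference, a tilt angle can be derived from raw accelerometer readings with a little trigonometry. The sketch below is a generic approach rather than the code running on the board: it assumes `ay` is the axis across the deck and `az` is the axis perpendicular to it, and the smoothing factor `alpha` is an arbitrary illustrative value.

```python
import math


def tilt_degrees(ay, az):
    """Side-to-side tilt in degrees from two accelerometer axes.

    Assumes ay points across the deck and az points out of the deck.
    Only meaningful while gravity dominates the reading.
    """
    return math.degrees(math.atan2(ay, az))


def smooth(previous, current, alpha=0.2):
    """Simple exponential low-pass filter to tame jittery readings."""
    return previous + alpha * (current - previous)
```

A flat board reads close to 0 degrees, and leaning the deck to either side gives a positive or negative angle.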

Earlier prototypes were made using an Arduino, but we moved to the ESP32 since it comes with onboard Bluetooth, which will come in handy since we need the longboard to transmit data wirelessly. In addition, it is more compact than an Arduino Uno and has more I/O pins available for when we add a pressure sensor array. Below is a demo of measuring wheel rpm:

Next steps will be a switch to battery power and setting up a Bluetooth connection between the board and our laptops.
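The arithmetic behind a wheel rpm reading is short enough to sketch. This assumes the sensor produces a fixed number of pulses per wheel revolution and that the time between pulses is measured in seconds; `pulses_per_rev` and the 70 mm wheel diameter are placeholder values, not measurements from our board.

```python
import math


def rpm_from_pulse_interval(interval_s, pulses_per_rev=1):
    """Wheel rpm from the time between two consecutive sensor pulses."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return 60.0 / (interval_s * pulses_per_rev)


def speed_m_per_s(rpm, wheel_diameter_m=0.07):
    """Ground speed from rpm, assuming the wheel rolls without slipping."""
    return rpm / 60.0 * math.pi * wheel_diameter_m
```

For example, one pulse every 0.1 s with one pulse per revolution works out to roughly 600 rpm.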

4-10 // Combining Sound and Sensors

Untethering the Longboard

With the addition of a LiPo battery, we could finally use Bluetooth to transmit data instead of having the board wired to our laptops. Everything is still taped down roughly at this point, so no riding it around quite yet!

Next steps are to design an enclosure to protect the internals and solder parts together for stability so we can ride the board safely.

4-11 // Feet Position Capture 1st attempt

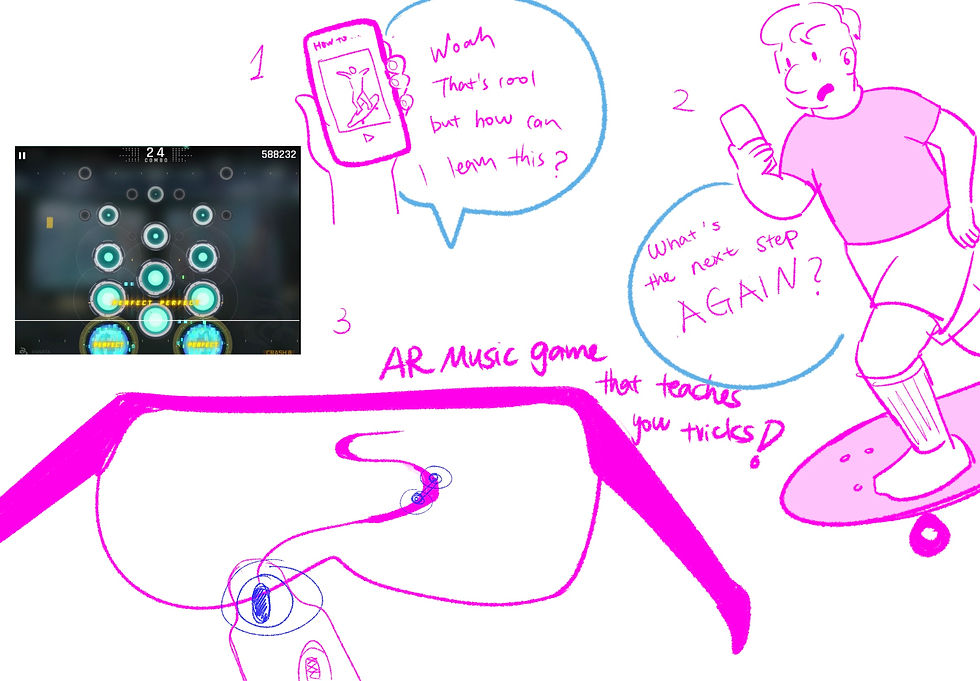

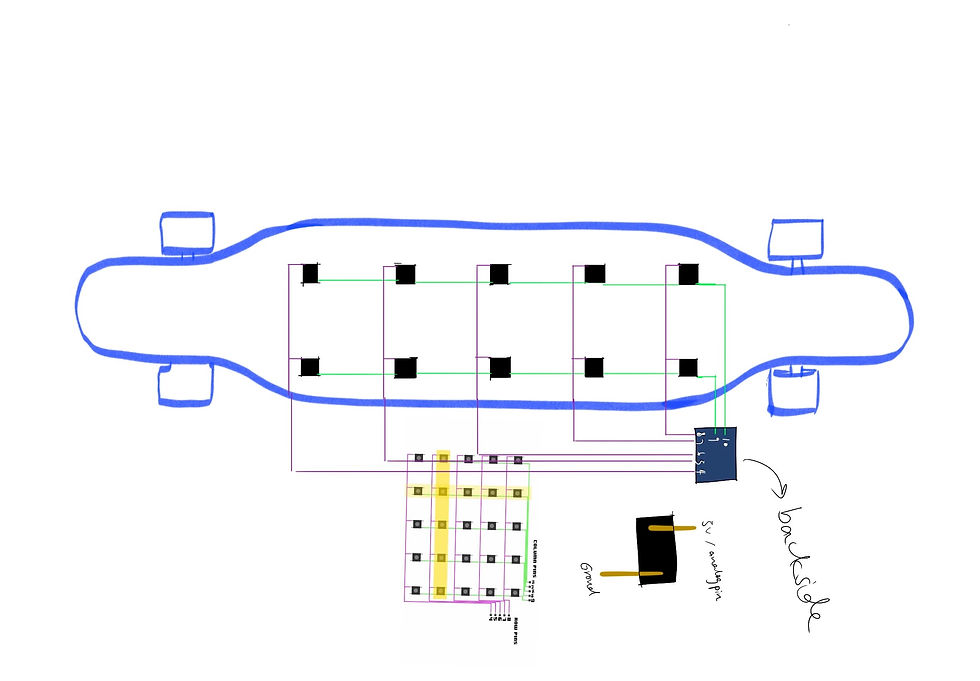

After watching a ton of tutorials on how velostat works, we sketched out a basic wiring diagram showing how to connect the sensors and arrange them in a grid to reduce the number of pins needed.

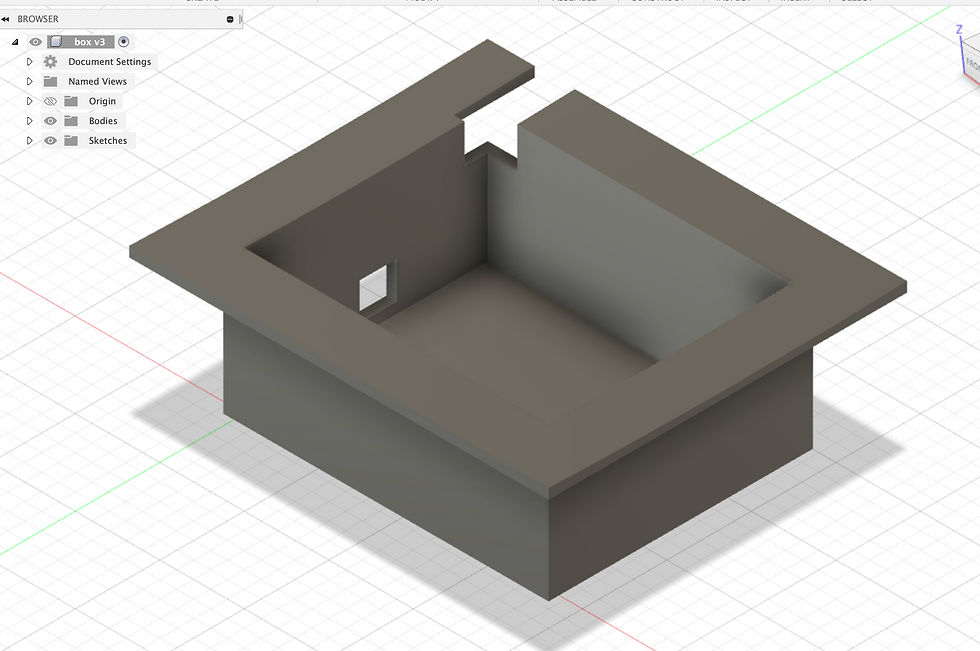
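The pin savings from a grid come from scanning: each row is activated in turn and every column is read, so a grid needs rows + columns pins instead of one pin per pad. Here is a minimal sketch of that idea. `read_cell` is a hypothetical stand-in for "drive row r, then read column c", and we assume a higher reading means more pressure, which depends on how the voltage divider is wired.

```python
def pins_needed(rows, cols):
    """Pins used by a scanned grid, versus rows * cols for separate pads."""
    return rows + cols


def scan_grid(read_cell, rows, cols, threshold):
    """Return the (row, col) cells whose reading is at or above threshold.

    read_cell(r, c) is a hypothetical callback that drives row r and
    returns the analog reading on column c.
    """
    pressed = []
    for r in range(rows):
        for c in range(cols):
            if read_cell(r, c) >= threshold:
                pressed.append((r, c))
    return pressed


# Usage with made-up readings standing in for real hardware.
fake_readings = [[0, 10, 0], [0, 0, 900]]
pressed_cells = scan_grid(lambda r, c: fake_readings[r][c], 2, 3, threshold=500)
```

A 4 x 4 grid scanned this way uses 8 pins rather than 16.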

Enclosure Design

We started off designing a case that fits all the components tightly, without much space in between, to keep everything secure.

Once we realized that the sensor wiring would still need adjustments as we went, we moved away from the compact design to a more accommodating and flexible one.

Max MSP Experimentation

Yay! We connected the Arduino to Max over a cable, and here is a demo of how the speed data is sonified 👇:

However, when we connect Max to the serial monitor while the board is connected via Bluetooth, it stops transmitting data for some reason. Therefore, we switched back to wired mode to test out Max.

4-17 // Linking Dances to our Concept

Analyzing Longboard Dances

Having finalized our inputs of speed, board tilt, and foot position on the board, we started analyzing longboard dances to explain our interaction, starting from basic carving up to dances that could reach the limits of our prototype.

Velostat Sensor Prototyping

With two out of three sensors working, it was time to start experimenting with velostat to make pressure sensors we could stick onto the surface of the longboard. Here are a couple of them: we learned that the conductive wires between the velostat have to overlap for the sensor to work. The longboard will have more and larger versions of these sensors, hopefully triggering sound effects or notes on a keyboard.
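Turning a noisy pressure reading into a clean "pressed" or "released" event usually takes two thresholds rather than one, so a foot resting near the threshold does not retrigger the sound. The sketch below shows that hysteresis idea. The class name and threshold values are illustrative assumptions, not values tuned on our pads.

```python
class PadTrigger:
    """Convert raw pad readings into press/release events with hysteresis."""

    def __init__(self, on_threshold=600, off_threshold=400):
        if off_threshold >= on_threshold:
            raise ValueError("off_threshold must be below on_threshold")
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.pressed = False

    def update(self, reading):
        """Return 'press', 'release', or None for the latest reading."""
        if not self.pressed and reading >= self.on_threshold:
            self.pressed = True
            return "press"
        if self.pressed and reading <= self.off_threshold:
            self.pressed = False
            return "release"
        return None


# Usage: a reading that wobbles between the thresholds does not retrigger.
pad = PadTrigger()
events = [pad.update(r) for r in [100, 650, 550, 620, 300, 100]]
```

Each "press" event could trigger a sound effect or a note, and each "release" could stop it.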

4-21 // Sound Design for Speed Data

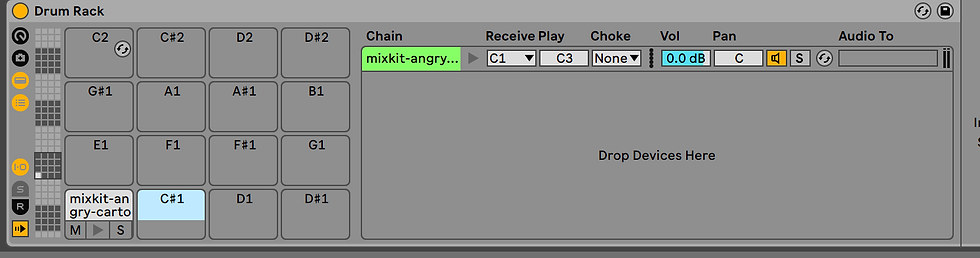

As we watched more YouTube tutorials, we realized how much another piece of software, Ableton, could enrich the sound effects. Because we were very new to this software, the exploration phase took longer than expected, but it was really rewarding to see what we can accomplish with Max and Ableton together. P.S. Max is actually built into Ableton.

In this iteration, we built a sequencing beat generator modulated by speed data. Although the Max patcher itself only generates a series of MIDI notes based on the placement of the bars, Ableton added an "underwater"-feeling effect to the otherwise boring sound.

In another iteration, we made a sampling instrument that loops a short audio clip at a rate set by the wheel speed, and we added ambient echo and robot-voice effects to give the sound more texture and randomness.
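Both iterations rely on the same underlying step: scaling a speed reading into a musical rate. Our version lives in a Max patch, so the Python below is only a sketch of the mapping idea, with made-up ranges (0 to 600 rpm mapped onto 60 to 180 BPM) and hypothetical function names.

```python
def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from one range to another, clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def step_interval_s(bpm, steps_per_beat=4):
    """Seconds between sequencer steps at a given tempo."""
    return 60.0 / (bpm * steps_per_beat)


# Usage: a mid-range wheel speed lands in the middle of the tempo range.
bpm = map_range(300, 0, 600, 60, 180)
interval = step_interval_s(bpm)
```

Clamping keeps the tempo within a musical range even if the board goes faster than expected.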

4-26 // Crucial Reframing

Longboard for Music Production

Up to this point, our interaction was framed around helping longboard dancers express themselves better through an augmented sonic element on their longboard. However, it wasn't clear what exactly the effects would be, what the music generated through dances would sound like, or whether anyone could recognize it as music.

So we went back to our inspiration, experimental music creation devices such as Imogen Heap's mi.mu gloves or even Oddball (a bouncy ball drumpad), and realized there was huge potential in adding music creation and musicians to our target users.

Using MIDI

MIDI is a decades-old protocol for sending messages over multiple channels to a computer, and is still widely used today for music production in keyboards, drumpads, and other electronic music instruments.

Instead of parsing serial data through Max, we are switching to MIDI, as it makes more sense for our application. MIDI is designed for several channels of input, versus printing single lines through the serial monitor. Additionally, once a microcontroller is programmed to act as a MIDI device, any DAW or music creation software can easily interface with it, and the output of each sensor can be mapped to almost any parameter within the software.
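To make the protocol concrete, here is a minimal sketch of what the raw messages look like. A Note On is three bytes (status `0x90` plus channel, note number, velocity), and a Control Change uses status `0xB0`. Channels are numbered 0 to 15 in the bytes, and data values run from 0 to 127. The helper names and the `tilt_to_cc_value` mapping with its 30-degree range are our own illustrative assumptions, not part of any library.

```python
def _check(value, upper, name):
    if not 0 <= value <= upper:
        raise ValueError(f"{name} must be in 0..{upper}")


def note_on(channel, note, velocity):
    """Build a 3-byte MIDI Note On message."""
    _check(channel, 15, "channel")
    _check(note, 127, "note")
    _check(velocity, 127, "velocity")
    return bytes([0x90 | channel, note, velocity])


def control_change(channel, controller, value):
    """Build a 3-byte MIDI Control Change message."""
    _check(channel, 15, "channel")
    _check(controller, 127, "controller")
    _check(value, 127, "value")
    return bytes([0xB0 | channel, controller, value])


def tilt_to_cc_value(angle_deg, max_angle_deg=30.0):
    """Map a tilt angle in [-max, +max] degrees onto 0..127 (assumed range)."""
    clamped = max(-max_angle_deg, min(max_angle_deg, angle_deg))
    return int((clamped + max_angle_deg) / (2 * max_angle_deg) * 127)
```

A continuous sensor such as board tilt fits a Control Change, while a discrete event such as a footstep fits a Note On.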

Here's a test of our velostat pads being used as drumpads through a Bluetooth MIDI library running on an ESP32:

4-25 // Presentation Outline

As we started thinking about how to structure the final presentation, we realized that it would be hard to understand the small and big picture of our longboard sonic experience design without solid examples, so we decided on a basic framework like this:

1. Start by introducing the 3 elements individually: what are the inputs/outputs?

2. Break down the composite sound of simple moves: start with the SKIIER, which only involves tilt and 2 feet position change.

3. Break down the composite sound of complex moves: for each step (1-4), play the sound resulting from the board tilt only 👉🏻 the sound resulting from the feet position only 👉🏻 the composite sound of both together.

Future Steps:

Culture recognition?

Is it possible to recognize specific tricks through ML? For example, if the sensors detect a combination of movements that matches an established trick (like Peter Pan or cross steps), the system could change the instrument or add a special effect on top of the auto-generated sounds. Maybe a "Korean Salsa" would sound like a traditional Korean instrument, whereas the "Thai Massage" would sound like Thai music.

Customization?

The other day we were joking about making a Daniel-catchphrase board, or a cat-meow board by mapping MIDI notes to imported sound samples.

4-28 // Sensor Grid Wiring

Before we fully implemented the MIDI code, we made this Max patch that triggers different sound samples based on serial data.

Later on, with the serial-to-MIDI transcoding successfully implemented, we moved our workspace to Ableton, which can easily handle the MIDI data and play the corresponding sounds automatically.
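As a rough illustration of what serial-to-MIDI transcoding involves, the sketch below turns one line of text into a MIDI message. The line format (`pad,<index>,<0 or 1>`), the pad-to-note table, and the fixed velocity are all hypothetical and not our actual firmware. The note numbers follow the General MIDI percussion map (36 kick, 38 snare, 42 closed hi-hat, 46 open hi-hat) on channel 10, which is index 9 in the status byte.

```python
DRUM_CHANNEL = 9  # MIDI channel 10, zero-indexed
PAD_NOTES = {0: 36, 1: 38, 2: 42, 3: 46}  # hypothetical pad-to-note table


def transcode(line):
    """Convert 'pad,<index>,<0|1>' into a Note On/Off message, or None."""
    parts = line.strip().split(",")
    if len(parts) != 3 or parts[0] != "pad":
        return None
    try:
        index, state = int(parts[1]), int(parts[2])
    except ValueError:
        return None
    note = PAD_NOTES.get(index)
    if note is None or state not in (0, 1):
        return None
    # 0x90 is Note On and 0x80 is Note Off; the low nibble is the channel.
    status = (0x90 if state else 0x80) | DRUM_CHANNEL
    velocity = 100 if state else 0
    return bytes([status, note, velocity])
```

Lines that do not match the expected format are ignored rather than raising an error, so stray debug output on the serial line would not crash the loop.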
