Intelligence in Environments - Rachel Legg

- rlegg1

- Jan 25, 2022

Updated: Mar 24, 2022

1/23/2022

Reflection on "Molly Steenson on AI Impacts Design" video

I found Steenson's talk very informative on the history of AI, along with its strong relationship to both design and architecture. I really enjoyed learning about Christopher Alexander's work on following and breaking down problems into patterns that can then be solved with design. It was also very cool that people later on have taken his theory and made completely new technology and approaches from his initial ideas. With a lot of the examples of early AI Steenson shared, it was exciting to see how these early experiments have transformed into technology we use daily. For example, the Aspen Movie Map (1977-1980) allowed people to virtually walk down the street in Aspen, very similar to how Google Earth works today (but on a much larger scale). I really had no idea how long artificial intelligence had been around, and I liked being able to see how far humans have transformed these ideas into the technology we have today. And it is very exciting to consider what AI could be in the future!

Reflection on the "Anatomy of AI" by Kate Crawford and Vladan Joler

Alexa creates small moments of convenience, yet it requires a huge network of resources. This article shares that the Echo user is a consumer, resource, worker, and part of the product. The AI can't function without the human, as Alexa constantly trains to hear, interpret, and act better for the user. (I found this very useful for thinking about our project in regards to sensors and how Alexa translates this data.) Yet, Alexa is outside the user's control besides its digital and physical interfaces. You can't open the sleek surface or repair it on your own, and its real complexity lies much deeper in a huge network of systems, including human labor, natural resource excavation, and how algorithms work across networks. Crawford and Joler's insight into the footprint of an Alexa was incredible and kind of nerve-wracking. To think about every device connecting back to the earth, like the lithium from the Salar and all the people who had to mine and manufacture (Sierpinski's Triangle), is just a start to this complexity. It is amazing how all of this is connected to asking "Hey Alexa, what time is it?". I'm curious how this complex system below the Echo will inform/transform AI in the future. Is it better to simplify or complicate even more?

Reflection on "Enchanted Objects: Design, Human Desire, and the Internet of Things" by David Rose and his TED talk

In "Enchanted Objects: Design, Human Desire, and the Internet of Things," David Rose brought up people wanting to create "quietude" and escape the chaos of daily life. This creates an opportunity to calm environments and make life simpler through advanced technology. It is interesting because, instead, objects are often made to interrupt and encourage multi-tasking. This is something to consider for how our projects work with an environment (trying to make the technology seamless within the environment). It was also helpful to learn about "hackable objects" and how many parties/networks are able to program and work with each other.

I really liked David Rose's TED talk and his approach to objects. He seamlessly connects ordinary objects with amazing capabilities that make life a little bit better. The pill bottle that reminds you to take your medication or the Energy Joule that shares how much energy you are using both are successful ways of using data to change behavior by embedding information in the right places. I liked how he concluded by emphasizing how making objects more tangible, embedded, and still humanistic better connects people to interfaces. As someone who isn't super tech-y, I like how he believes in making technology graspable to everyone and making normal interactions a little bit magical! I think this insight is especially useful when thinking about how people will perceive and grasp our own project.

Research Questions

1) How can AI make people aware of their movements through a space? How can motion be translated into structure(s) that connects to people within a space?

2) In a shared work environment like TCS, how can AI aid people in their work or help create a more comfortable work environment? Either for easing stress or assisting in tasks.

3) How can AI and this data support people (students) studying and working? In what ways can it help track and manage time for individuals?

4) How do you make the AI more personal in a public space?

5) How can AI be used to make shared work spaces more sustainable or ingrain sustainable habits in students/visitors/etc?

6) How can this data be shared in a fun, engaging way?

7) What is the best form of presenting this solution?

Example 1 | Herman Miller Live Platform

Collecting data to personalize workspaces and adjust furniture in a workspace environment. Making a space comfortable and flexible.

"A new digital platform designed to personalize and optimize the office for the people who use it. More than sticking sensors on furniture, Live OS offers people and their organizations unprecedented insight into what actually goes on in the office, and a chance to use real data to drive smarter decisions. People will suddenly have the ability to personalize their workspaces, book rooms on the fly, and digitally connect to chairs and tables for a more ergonomic day at the office."

Example 2 | Daniel Rozin's Interactive Art

Daniel Rozin creates interactive art that uses random objects, sensors, and computing that interact with viewers. I love the inventiveness and how it engages visitors.

Example 3 | Timeular Tracker

Measures what you have been doing all day and how much time you spend on tasks. The resulting data helps identify ways to increase productivity. Interesting to think about how people engage with this tracker and its form.

"The Timeular Tracker allows everyone to improve their time management one gesture at a time."

Example 4 | Hourglass Pendant

This hourglass pendant light affects the mood, ambience, and comfort of a space as people work.

"This conference room pendant lamp subtly tracks the passage of time and encourages different types of conversation over the course of a meeting. It starts by casting blue light upward for blue-sky generative energetic thinking, then gradually descends and shifts to warm downward light to subtly motivate more convergent, sequential thinking at the end of the hour. I collaborated with architecture student Sam Parsons for this work."

Initial Sketches of Interaction with Data of a Space in a Shared Work Environment

Initial Idea 1:

I like the idea of a wall with spinning objects that move in the direction you are going (left or right) as you pass by, similar to a pinwheel that moves one way or the other depending on the wind. It would be interactive and use the motion sensor data to engage with people in TCS. I think it could have a rain-like sound that adds comfort to the room to support a working space.

Initial Idea 2:

Next, I thought it would be interesting to create a "study buddy". This would be some kind of shape/form that would recognize a person's presence, bring a comforting light/adjust lighting in the room, let you know when you need a break/stretch (maybe play a melody?), and help track time.

A Hybrid:

I also thought about combining these two, so that you walk in and a structure greets you with movement as it recognizes a person in its space. It would follow your motion and, similar to the study buddy, adjust the lighting, tell you when to take a break, track time, etc.

1/26/2022

Reflection on "Data Materiality Episode 4: Yanni Loukissas on Understanding and Designing Data Settings"

I found it very interesting how Yanni's expertise in architecture and ethnography led him to working with data, and I felt these interests help him a lot in discovering and understanding a place before deciding how to use its data. His insight to take a closer look by talking to people and breaking down what this data actually means to places was very useful when thinking about our own project. I think going to TCS and getting a better understanding of all the spaces and what people look for out of the building will give even more pointers on what direction to take with our initial ideas.

Reflection on "IoT Data in the Home: Observing Entanglements and Drawing New Encounters"

I loved reading about the 5 different themes and the 5 concepts that Audrey Desjardins and her team came up with as a solution for each problem space. All were clever and totally changed the way you would think about the data in your home. For example, to break down the fear of what IoT does with your data, they developed "Data Epics," where people know the data is sent to fiction writers to create stories. This idea not only changes the mindset of how people think about their data being collected, but could change behavior as well, since people know they could affect a writer's process somewhere else. I also felt this highlights trying to be transparent with data so people know how their information is being used. In this article, all these concepts challenge the way people understand data, but in very different ways. By being able to track how long a task takes or being able to visualize the data being collected in a space, these designs are creating a relationship to spaces and to the people themselves. I feel this is important to note for our project. We can pick specific sensors/data and completely transform how people understand and interact within TCS.

Reflection on "We Read the Paper that Forced Timnit Gebru out of Google. Here's what it says."

I felt my biggest takeaway from this article was the transparency (or lack thereof) with AI in large companies. The paper revealed issues with bias and energy efficiency. I was actually very surprised to see the graph portraying CO2 emissions and the amount connected to the neural architecture search. Responding to this paper, Google's head of AI stated that a lot of relevant research on these topics had been ignored, even though the paper included extensive information about the work already done in these areas. It is hard to know what the truth is, but it seems to create fear and a more negative feeling around moving forward with AI. I think, similar to the last reading, there just needs to be more transparency in what companies use data for and how this can affect people and our planet. That can also help create more answers for how to move forward.

Analysis of Intriguing System

Daniel Rozin's Work

I was very intrigued by Daniel Rozin and his artwork. His artwork translates people's movement and presence into the movement of things and in a lot of ways holds an excitement and awe. His work inspired my ideas, as I also want our project to be responsive and alive and to connect motion to people. I also enjoy that it has a sound element to pair with the interactive art.

This piece was commissioned for Nespresso (Plaugic)

Each tile in one of his pieces is attached to a motor. "The motors are designed as a circuit that responds to 52 controllers and an algorithm. There are four passive infrared sensors attached to the piece, like the kind in a security camera, that can detect movement from heat energy as a person walks by." (Plaugic)

(Plaugic)

His work uses a random piece (the part you see) connected to a motor. These motors are on a circuit that responds to a controller and an algorithm. They then respond to infrared sensors that detect movement/form and react, leading to this magical experience. (The infrared sensors rely on heat, detecting people's presence.)

Group Update

Eric, Alison, and I had similar interests and decided to team up for this project. We met and brainstormed which direction to take. As initial points, we found we were interested in objects that communicate with each other (invisible roommates); a responsive, live environment that connects motion to people (like the pinwheel, Daniel Rozin's work, etc.); and subtle, ambient nudges.

This narrowed down to:

1. a form that picks up on motion (use of motion sensor data) and

2. an ambient reaction that catches the users’ attention

(What if there is an add-on that you own that adds personal preferences?)

We also then talked about long term + short term data collection and how that could affect the interaction within the environment. We thought this could be like a forest or garden that is initiated by the movement of one person and added to a larger collection of data that people can observe over time.

We chose these questions to guide us as we continue with these ideas.

Storyboard

We decided to all create our own storyboards so that then we would have more ideas to narrow down our approach to this project.

We decided that this interaction would mostly focus on the motion data (maybe temperature, too?) to determine where people are and for how long. Our main stakeholders would be students going to TCS to work/study.

My first storyboard displays an orb that lights up when it senses a person. When a person sits down, a seed appears on the orb. Over the time that person sits there, the seed transforms into a plant and becomes more intricate. When the person stands to leave, the orb will glow and the same glow will appear on the visual art screen (on the wall in the room) where the flower will appear. This visual art screen will portray the data of people spending time in TCS. This board adds a nice serenity to the room, while also showing everyone's work and time spent in TCS.

My next storyboard takes a similar orb/form approach where the orb blinks when you approach an available seat, as if it's greeting you and happy to see you. Over time the color of the orb would change, based on how long you work in that space. After an hour or so, the orb would alert you to take a break. I also thought it would be interesting if there was a personal element piece that you kept with you and that held your personal study preferences and how you want the study buddy orb to interact with you (this would be an optional element for students who want to invest in it). For example, maybe the orb alerts you when it has been 30 minutes and plays your favorite tune. When you leave, just take your piece with you and the orb will say goodbye. This personal piece would save your study data so you can improve studying habits and know how much time you spent working.

I think another thing to consider is what happens if you get up (maybe to print or go to the bathroom). Both of these ideas could include a pause button so that your session doesn't end.
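To think through the timing logic of the study buddy orb, here is a minimal sketch in Python. It is only an illustration of the storyboard, not something we built: the class name `StudySession`, the color names, and the 20/40/60 minute thresholds are all my own assumptions (the storyboard only says the color changes over time and that a break alert comes after "an hour or so"). It shows how a pause would keep time away from the desk from counting toward the session.

```python
from dataclasses import dataclass

# Assumed threshold: the storyboard only says "an hour or so".
BREAK_AFTER_MIN = 60


@dataclass
class StudySession:
    """Hypothetical model of one person's session at an orb."""
    worked_min: float = 0.0
    paused: bool = False

    def tick(self, minutes: float) -> None:
        """Advance the clock; paused time does not count as work."""
        if not self.paused:
            self.worked_min += minutes

    def pause(self) -> None:
        """Person steps away (printing, bathroom) without ending the session."""
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def orb_color(self) -> str:
        """Map time worked to an orb color (palette is an assumption)."""
        if self.worked_min < 20:
            return "green"
        if self.worked_min < 40:
            return "yellow"
        return "orange"

    def needs_break(self) -> bool:
        """True once the person has worked long enough to be nudged."""
        return self.worked_min >= BREAK_AFTER_MIN


session = StudySession()
session.tick(30)      # 30 minutes of work
session.pause()       # step away from the desk
session.tick(10)      # these 10 minutes are not counted
session.resume()
session.tick(30)      # back to work
print(session.worked_min, session.orb_color(), session.needs_break())
```

Keeping the pause as a simple flag means the session survives a short trip away from the desk, which is the behavior I was hoping for in both storyboards.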

1/30/2022

Reflection on "Designing the Behavior of Interactive Objects"

Personality can be used to define interactive objects and make the user experience with objects and data better. I felt this reading was very helpful in thinking about the personality of our project and how we want people to feel when interacting with our system. I really liked hearing about the use of metaphors and how they can be used to help people grasp a concept and how to interact with things. These metaphors carry meaning even when things are intangible and help convey personality through the simple meaning of words. I was also intrigued by personality depending on stereotypes, as this is consistent behavior that anyone is able to recognize. Being able to read the Sofa-bot case study and how the personality of the prototype was defined was pretty interesting and complicated. From this case study, I took away that it's important to build a relationship with objects for people to feel comfortable and have a grasp of how to interact with a system. I think this is important to keep in mind moving forward in this project.

Reflection on "Data Imaginaries: Between Home, People, and Technology"

I felt this video posed a lot of great questions about the relationships of data (and its representation) and showed some nice examples of products that show different perspectives for thinking about data. Out of the three products, I especially found the "Soft Fading" concept very interesting. It used the sun to show how a piece of cloth can change over time. It was very interesting to see how the expected fading of the cloth was completely different from the actual faded patterns. There are many factors that you can't account for, like moving the mechanism or a shadow from another object, that this mechanism can then reveal through the cloth fading. It is interesting to think about something organic like cloth revealing a history of data (but if you think about it, everything shows a history, like scars or tree rings). She gave a really nice insight that data engagement and encounters don't need to be about productivity or functionality, but can simply reveal data in interesting ways and show what it's like to be with data.

Reflection on "Top 5 Learnings for Visualizing Data in AR"

This reading was pretty short, but gave some good notes about AR. Humans live in 3D worlds, and AR creates a comfortable/natural interaction with technology. I agree with Sadowski that interactive AR experiences are fun and they hold a lot of possibilities with bodily interaction and the senses as they immerse you into an environment. They also can show data in interesting ways that can change the meaning of environments and how people see them (and their data being collected).

Analysis of Interesting System

WWF Forests App

"Using these cutting-edge technology features provided by Apple, augmented reality tools help WWF protect forests by connecting us to the natural world in vivid, evocative detail and showing us that our actions have real impacts on forests, no matter how far away they may seem."

This app uses AR to put forests right into your living room. It was made in partnership with Assemblr.

It has different "buttons" that reveal information about different elements of habitats that are key to ecosystems' success. There are different forest "chapters" as well to change up the AR and allow you to learn more about forests in different situations.

I found this AR experience compelling for how to immerse people in a natural environment within a building space. While we are planning on connecting our flower/garden visualizations to data, I think this is a nice example to reference when considering how our data garden could use AR. I also like this storytelling element and am wondering how we can show the accumulation of data over time through storytelling to people visiting TCS.

Three Personality Traits for Our Project

Whimsical

Gentle

Engaging

Updated Storyboard

Alison updated our storyboard to show our updated concept.

Update on Process

Rough Prototype

I created a gif to show a rough idea of the growth of a plant within an orb form.

We each did some rough prototyping of what this data experience could look like. Eric and Alison did some more experimenting with AR and plants, and created a visualization of what it could look like for a plant to grow within an orb-like shape.

2/2/2022

Reflection on Giorgia Lupi's Talk

I found all of Lupi's projects very interesting and engaging. I think her "Dear Data" project was a very fun, different way to think about data from a very human perspective. These handmade codes make symbols and numbers so much more meaningful and send the receiver looking for true meaning in the letter. I really liked seeing her process for the fashion patterns. It was amazing to see not only how she made her own data, but then transformed it into a fun creative visual that means a lot more than just some shapes. I also loved how she shared the sort of legend that dissects each pattern and even designed bags so customers can keep and sort through her work if they want. She creates a message and makes the clothing so much more through her use of data, which is fascinating. I really enjoyed this talk, as she uses data in very different ways that I probably would never have considered were possibilities (in fashion, letters, and to teach about microplastics). I think using data in these surprising ways is something to keep in mind when trying to make the data of TCS (or another project) surprising and completely out of the box.

Values to Include After Speaking with Isha

I think our group sees awareness and empathy as key values for our project. We want people working in TCS to gain an awareness of their data being collected through this subtle interaction. We don't want this to be seen negatively, but as interesting and engaging. Hopefully people will enjoy it and be happy to come back and interact with it again. We also see empathy as important, since the form would be a sort of friendly presence while you are working that then connects you to a community of people and data. This reveals you are not alone in your pursuit of work and success. Overall, we hope this will make people more curious and aware of themselves and their data within TCS.

Things to Work On

We talked about a lot. While sticking with our concept, there are a lot of directions/forms it can take. I think the biggest thing we are trying to figure out is how exactly we show something growing. Do we show something in the process of being made and then the fully rendered plant is revealed when accessing the QR code? Or do you know what the plant looks like from the beginning? We also were considering making some kind of key that would define the plant characteristics. These characteristics would be based on the data which we decided would be motion and sound for now.

Below is a board of many ideas. We wanted to broaden approaches to see if either there are things that have more potential or can point us in the right direction of this plant data interaction.

All these ideas and thoughts raised interesting questions, but still, it was difficult to pinpoint what to do, as we could see merit and issues with all of them. It also seemed we kept thinking back to our original ideas and how we could make them better.

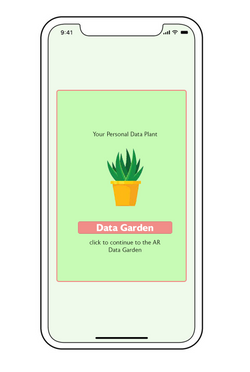

Instead of the plant growing in an orb, this shows the visualization as a basic plant growing on the table... You would be able to see visualizations of data being collected and, in a way, feeding the plant for it to continue to grow over time. A QR code would then be scanned to reveal the fully rendered plant that represents you and your data from spending time at TCS. The data (motion and sound) would help form what the plant looks like. This would then connect you to the AR Data Garden space so you could explore different plants and their data and be connected to a larger community of your peers and this artificial nature.

Environment

We are deciding between the front/entrance room in TCS, which has many tables and space where people can sit down to work, and the second floor seating area, which has a long bar-like working space that looks out over this entrance space. We see both spaces having a lot of potential and are going to consider both moving forward until one speaks more to the nature of our interaction.

2/7/2022

Update

Over the weekend, Alison, Eric, and I met three times to try and solidify our ideas and direction. The first 2 sessions consisted of thinking, brainstorming, and more thinking. It almost felt like going in circles, but in a lot of ways it also proved helpful to see which points we were repeating and struggling with the most and what we thought were good directions to take.

We sent many examples, links, and ideas, and it seemed a lot of our thoughts moved back to our initial idea. Monday, we decided to focus on a plant showing growth of some kind in relation to the presence of a person and then connecting this to the network of data of the TCS building. Questions we needed to iron out included what does this form look like? What is the second phase (AR or an app?)? What is the data we are focusing on specifically?

Here are some links I found interesting. This first image is a human motion sculpture that captures data on movement. Next to this is an image of an art piece showing data on music. I thought these visualizations were interesting in regards to the history of an interaction like moving or listening, and in some ways they relate to us trying to capture the history of people in the TCS building.

Moving forward, we redefined our goals and refocused our attention to our original idea and how it can improve. We also defined that the data we would focus on would be motion and infrared to detect presence.

Dina sent a Vimeo video (https://vimeo.com/224447550) which definitely supported our direction and sparked some ideas of how our plant could be executed and interact with people and their data.

I liked the idea of creating a simplified lotus flower that opens and closes on the desk in response to someone's presence. This could be a simple beautiful form that brings simple delight and connects to a large system/network of data.

Updated Storyboard

Imagery/Inspiration

2/9/2022

More Ideas

After talking to Dina, we decided it would be interesting to visually show the data being fed to the flower/plant from the mites sensor. This reveals the location of the sensors and makes the collection of data a little more transparent. We also thought these "molecules" of data could be different colors and shapes to differentiate types of data. Above is a rough sketch and visualization to show the AR and the data in relation to the plant.

Breaking Down our Idea using Cards and Questions

2/10/2022

We extended our inspiration board to look at possible forms, movements, and interactions.

Feedback:

Elizabeth and Dina both suggested we start prototyping to work out the wrinkles. Elizabeth showed a lot of cool ideas and directions for the plant form and gave some suggestions in regards to the AR. Dina showed a concept video about origami birds becoming a part of the Carnegie Museum of Natural History experience. It was very cool and I felt it resonated with all of us in how we want each person's plant to reveal the data of TCS. I feel like this helped give some direction for creating a fun interaction with AR to explore and learn.

Rough Prototyping

After exploring forms and talking to Elizabeth and Dina, we started rough prototyping to get some ideas of how to move forward for the plant form portion.

We played with paper and foamcore to explore the opening and closing of petals. Eric also played with Arduino and a motor to see how we could potentially move the petals.

2/13/2022

We created a service map outlining all the parts of the plant form and data garden and their roles in order.

Service Map

We also talked about our game plan for the coming week. We wanted to start moving forward in actually creating the plant form and a working AR web platform. We also decided that our plant form would open and close, rather than growing larger. We felt this would be more noticeable and a nice movement to try and create within the space.

2/15/2022

Process Checkup

We gave a quick presentation to the class about where we are with our project. It seemed our biggest criticism was "why AR?". So while we will move forward with the flower form, we met and discussed/brainstormed what other approaches we could take instead of an AR web experience on the phone.

We discussed the pros and cons of AR. We then thought about how we could avoid relying on a personal phone for AR. We came up with the idea of a "magnifying glass" that would already be within the space that people could pick up and look through to see the AR visualization. We also talked about projecting the data visuals, which would be visually compelling, but less interactive. These all have pros and cons, so next we need to decide in which way to move forward.

2/17/2022

We met with Dina and then Elizabeth to run these ideas by them. Dina gave us a lot of feedback and case studies to look at in regards to the magnifying glass idea. Elizabeth on the other hand seemed to be more for the projection idea. Elizabeth gave us really good feedback that we should revisit the form and its action. The opening of a flower has been done before. How can we find a form that is different? Also, does it have to be an opening motion?

We decided to meet the next day and each bring 5 sketches of what the form and action could be.

2/18/2022

We met and discussed different forms and approaches. We came down to aspiring flower idea that would move with presence or a fanning outward motion. We ultimately picked the fanning outward motion and saw that this could be paired with the looking glass concept to make a seamless product in relation to the TCS building.

2/19/2022-2/21/2022

We worked on prototyping!

We started with foam core and paper forms. I started using SolidWorks to map out forms, but shifted and instead decided to use laser cutting for the final form, which could make the different sections. We decided there would be 4 petals. The first petal would be the AR looking glass. The middle two would be the 2 movable pieces that react to your presence. And the fourth would be an unmovable backing. These would all connect to a base with slits that would connect to the Arduino and act as a holder for the petals.

Also, in regards to the mechanism, I looked at toys and how they use slits to have moving parts. While this mechanism is a little more complicated underneath than what we need, I thought this use of rods through long slits was a good reference for how our petals can move back and forth.

As we move forward, we are thinking we will 3D print the base. So we need to perfect the Arduino in relation to the petals. Next, we will begin focusing on the AR element. We are inserting a phone into the front petal to act as the looking glass. In reality, this would be its own product.

2/22/2022-2/24/2022

This week Eric worked on the Arduino, Alison created the AR, and I created a brand identity and poster that could be paired with the form in the space.

We talked a lot about what the base would look like. We decided the moving petals would have slits that go all the way across so there is more of a range. There would be a front and back petal to the moving petals that would be connected to the base and not move. They attach to the two petals and help the mechanism work. Then, the front AR petal would have a slit to sit in, but would not be attached, so that it is removable. We are also planning on having the back of the stand flat so that it can push up against the wall on the table space.

AR update! Alison first put together a video of large particles that could connect the plant form to the mites sensors. This looked very cool, but we thought it would be nice if the particles moved. She then created an animation where the particles wrap around the plant form and reach to the mites sensors.

Although it is a little hard to tell with the white background, here is a particles gif of how the particles disperse from what would be the plant form!

I created a brand, along with a poster that can be paired with the form in the TCS space. I wanted the form language to be natural, but also have a tech-like quality. I went with a three leaf line layering as well as three dots to represent the AR portion of the experience. I also wanted the poster to be informative about the different elements of the data garden so that if someone needed guidance on how it works, they have a reference! It shows that the plant reacts to presence, that the front petal is removable, and that it leads to an AR experience.

Eric worked on the arduino! He first made a mechanism for one petal to be able to move back and forth. Then he started refining to make the petals on each side move outward. This took a lot of thinking of where the petals would be connected (at the bottom or in the center). We decided to connect them at the center as that keeps the forms more compact and would look a lot cleaner and attractive.
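As a way to reason about the petal motion, here is a small Python simulation of the behavior described above. To be clear, this is not Eric's Arduino code. It is a sketch under my own assumptions: the function names `next_spread` and `petal_angles`, the 30 degree open position, and the 5 degree step per update are all hypothetical. It shows two petals pivoting at the center and fanning outward in mirrored directions while someone is present, then easing closed when they leave.

```python
# All values below are assumptions for illustration, not measured from our prototype.
CLOSED_DEG = 0    # petals resting together
OPEN_DEG = 30     # how far each petal fans out when someone is present
STEP_DEG = 5      # how far the spread changes per update, for a gentle motion


def next_spread(current: int, present: bool) -> int:
    """Move the spread one step toward open (presence) or closed (no presence)."""
    target = OPEN_DEG if present else CLOSED_DEG
    if current < target:
        return min(current + STEP_DEG, target)
    return max(current - STEP_DEG, target)


def petal_angles(spread: int) -> tuple[int, int]:
    """Both petals pivot at the center, so they mirror each other."""
    return (-spread, spread)


# Someone sits down for 8 updates, then leaves for 3.
spread = CLOSED_DEG
for present in [True] * 8 + [False] * 3:
    spread = next_spread(spread, present)
    print(present, petal_angles(spread))
```

Stepping toward a target instead of jumping straight to it is what would give the slow, gentle fanning we want, and mirroring the two angles around a center pivot matches our decision to connect the petals at the center.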

Another hurdle we are trying to tackle is our approach to material. We were thinking of using acrylic, but are trying a variety of ways to treat it. Do we paint it? Or sand it to give a frosted effect? Is it better if it's opaque or transparent? These are questions we are trying to address in curating our final form.

I also put together a quick video of transitioning the screen from black to yellow that we could play on the phone. This transition would also start with someone's presence.

2/26/2022

We met and created a game plan for how to accomplish this project in the coming week.

We planned out all the pieces we needed for the form. On Saturday we did some practice cuts in cardboard for the petals. Sunday would be for submitting the 3D print, laser cutting the acrylic petals, and gluing and sanding the petals. Monday would be for painting and assembling. Tuesday would be for videotaping and editing. And then Wednesday would be for refining the presentation video and taking nice photos of our form.

Of course, things do not always go as planned, but the schedule gave us a good guideline for setting goals and reaching the deadline.

Also, I updated the systems diagram to align with our current concept and system.

2/27/2022

Techspark ended up closing, so we did the practice cardboard cuts first thing on Sunday, before cutting the acrylic.

We were able to create a mockup for the top of the base to see how the petals would fit and then use those measurements for the base.

We then cut the acrylic! With all the pieces, we could then glue the layers of acrylic together to create the thicker petals. After gluing, we sanded so that the petals would have a rounded edge. We used spackle on the edges to help fill the ridges where the different sheets of acrylic meet.

2/28/2022

Monday, we first sanded the spackle and edges. Then we spray painted the pieces and submitted the 3D print!

I also put together the user journey map to help outline the steps a student would go through in the TCS space, along with their thoughts and feelings at each step.

3/1/2022

We picked up the 3D printed base and spray painted it white!

We have all the pieces! Once the base dried, we were able to insert the petals and Arduino to start assembling our form!

3/2/2022

We met Wednesday morning to film footage for a demo concept video. Alison and I took many videos of the interactions within TCS. We then met in the afternoon to edit them together. We decided we would use the time after spring break to improve the edit with infographics and text.

First demo video:

Then, we went to the photo room and took some final model photos.

3/3/2022

Today, we got some feedback from both Dina and Elizabeth.

Most of it had to do with our concept video. Dina suggested animating the logo, adding an outside shot of TCS, and adding text and infographics to talk people through how it works/functions. She also suggested playing with base shapes so that it would better integrate with the space.

Elizabeth suggested playing with motion more so that it better integrates the person and the technology. For example, it could wiggle when someone sits down so it is more noticeable and exciting. And then maybe it wiggles again to prompt the person to pick up the AR device (with an icon or text telling the person to pick it up). She also suggested improving the context of the video so that someone who has no context can grasp the idea. And lastly, she suggested better placing the particles so that you can tell they are coming from the plant.

We will consider these over break and revisit them when we return and finalize details.

I also received feedback on the user journey map and updated it further. I stated the design opportunity, showed the varying stages of discovery, interaction, and feedback, and changed the feeling portion into a graph to give a sense of the emotion without relying on words.

3/11/2022

I made a short animation of the logo for the video that shows the movement of the petals and data particles.

3/14/2022

Eric, Alison, and I met to discuss final steps for the demo and presentation. This included finalizing slides, creating an animation that prompts the person to pick up the looking glass, taping some more footage to update our video, and adding words to better explain the video.

I made the animation and Alison helped create the slides. Eric and I then planned to meet the next morning to retake some videos at TCS.

3/15/2022-3/17/2022

We took footage and worked together to update the slides and splice together the video. When we took the footage, we attempted to make the plant wiggle before opening, but it was not very smooth, so we decided to omit that idea.

On Thursday, we went through our slides with Dina, who gave great pointers about adding more in-context pictures of TCS and better showing the connection between the TCS mites and our form.

Slides: https://docs.google.com/presentation/d/1P-SAIGcTeBd6txAjmkUa0dTD79_N3Z3nRTL9lrAIKvI/edit?usp=sharing

3/20/2022

Reflection

This project has been a great way to learn about how data can transform an experience. It was interesting to try to bridge the gap and make the data collected seem more graspable to students visiting the space. It was also interesting to consider how we could visualize the data in a way that gamifies the experience and makes it more exciting for the user. The process of going from concept development to creating the prototype was very fun and rewarding. We went through so many stages that helped create a solid concept around a subtle response to presence in the space, followed by the AR experience that reveals more about TCS and the relationship between the plant form and the mites sensors in the space. While we explored so many different options, the threads of these ideas remained pretty strong and helped shape what is now Data Garden. I felt our form turned out really well in regards to abstracting the plant form and its bloom motion. This was where I felt we hit the biggest barrier and had the greatest need for brainstorming sessions, and the product embodied all these conversations. I also really enjoyed giving Data Garden a brand and logo, which brought another fun element to this project and made it feel that much more complete. I think in the future we could go back and further consider how the plant could be better integrated into TCS (maybe entirely a part of the table, rather than in a base) and how to create a more seamless transition on the looking glass screen from the animation to the AR experience. Overall, I'm proud of what we were able to accomplish, and Data Garden was able to create a very interesting narrative within TCS that grows the relationship between the student working and their environment.

3/24/2022

Following the showcase, I also wanted to comment on the feedback received. The feedback raised a lot of great points in regards to the nature of the project and how we could continue to develop it moving forward. For example, how do we give Data Garden evolving elements that would make it exciting every time someone sits down, rather than it being the same experience every time? I think that is the great thing about AR, as it wouldn't be difficult to continue to experiment with different AR features and allow people to keep developing a relationship with the space over many visits. Another visitor made a great point about not ignoring the unsettling part about your data being collected. It may be a fun experience, but it also could be kind of weird to learn your information/data is being collected.

This project has taught me a lot about awareness and the many different mediums that can help portray a somewhat ungraspable concept like data. I had the opportunity to explore a lot of new areas like AR and different fabrication methods (laser cutting/3D printing). This project was great, and I'm excited for what the next project has in store.
